OpenAI’s latest gpt-oss-20b model lets your Mac run ChatGPT-style AI with no subscription, no internet, and no strings attached. Here's how to get started.

On August 5, OpenAI released its first open-weight large language models in years, allowing Mac users to run ChatGPT-style tools offline. With the right setup, many Apple Silicon Macs can now handle advanced AI processing without a subscription or internet connection.

Running a powerful AI model on a Mac once required paying for a cloud service or navigating complex server software. The new gpt-oss-20b and gpt-oss-120b models change that.

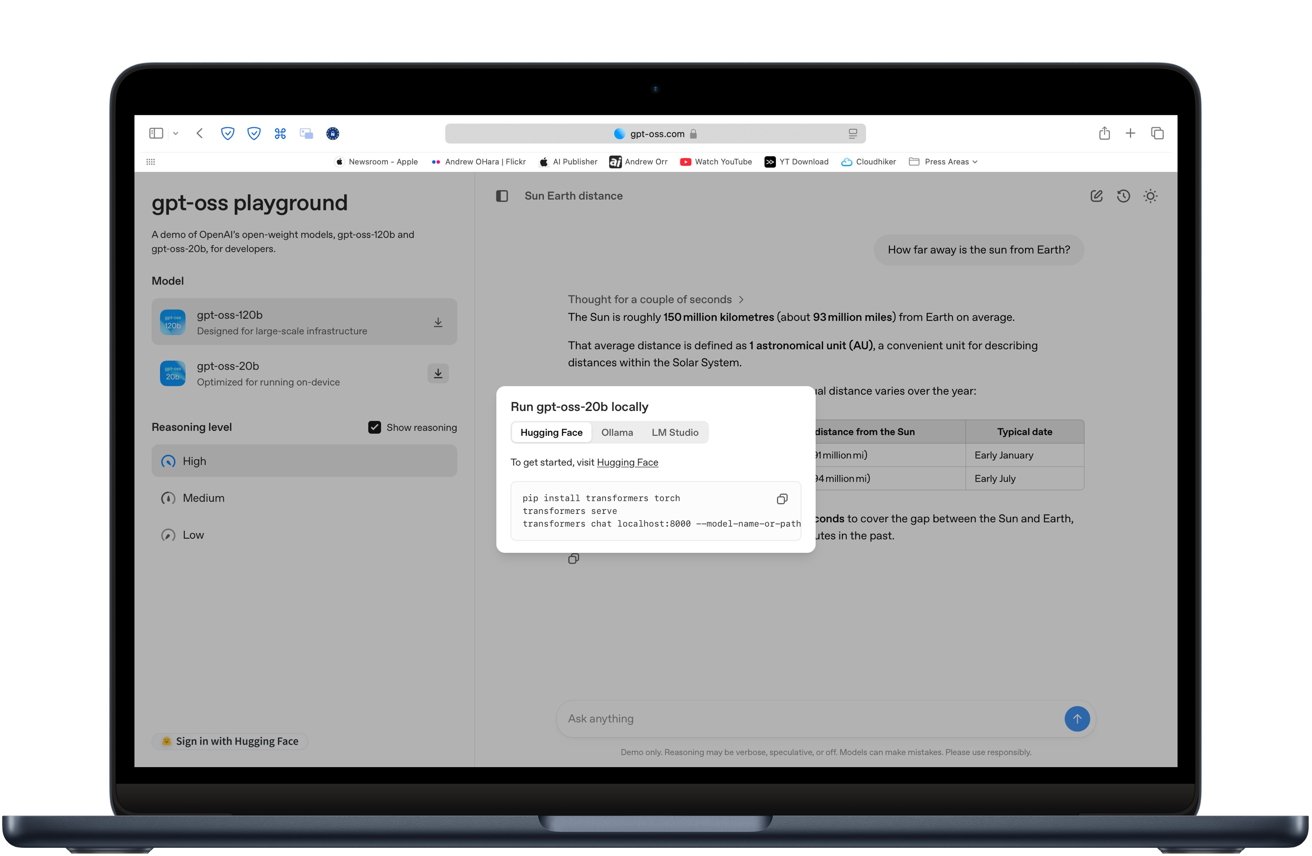

The models offer downloadable weights that work with popular local-AI tools like LM Studio and Ollama.

You can try the model in your browser before downloading anything by visiting gpt-oss.com. The site offers a free demo of each model so you can see how it handles writing, coding, and general questions.

What you need to run it

We recommend at least an M2 chip and 16 GB of RAM. More is better. If you have an M1 processor, we recommend the Max or Ultra. A Mac Studio is a great choice for this because of the extra cooling.

The model struggled a bit on our MacBook Air with an M3 chip. As you’d expect, it heated up too.

Think of it like gaming on the Mac. You can do it, but it can be demanding.

To get started, you'll need one of these tools:

- LM Studio: a free app with a visual interface

- Ollama: a command-line tool with model management

- MLX: Apple’s machine learning framework, used by both apps for acceleration

These apps handle model downloads, setup, and compatibility checks.

Using Ollama

Ollama is a lightweight tool that lets you run local AI models from the command line with minimal setup.

- Install Ollama by following the instructions at ollama.com.

- Open Terminal and run `ollama run gpt-oss:20b` to download and launch the model.

- Ollama will handle the setup, including downloading the right quantized version.

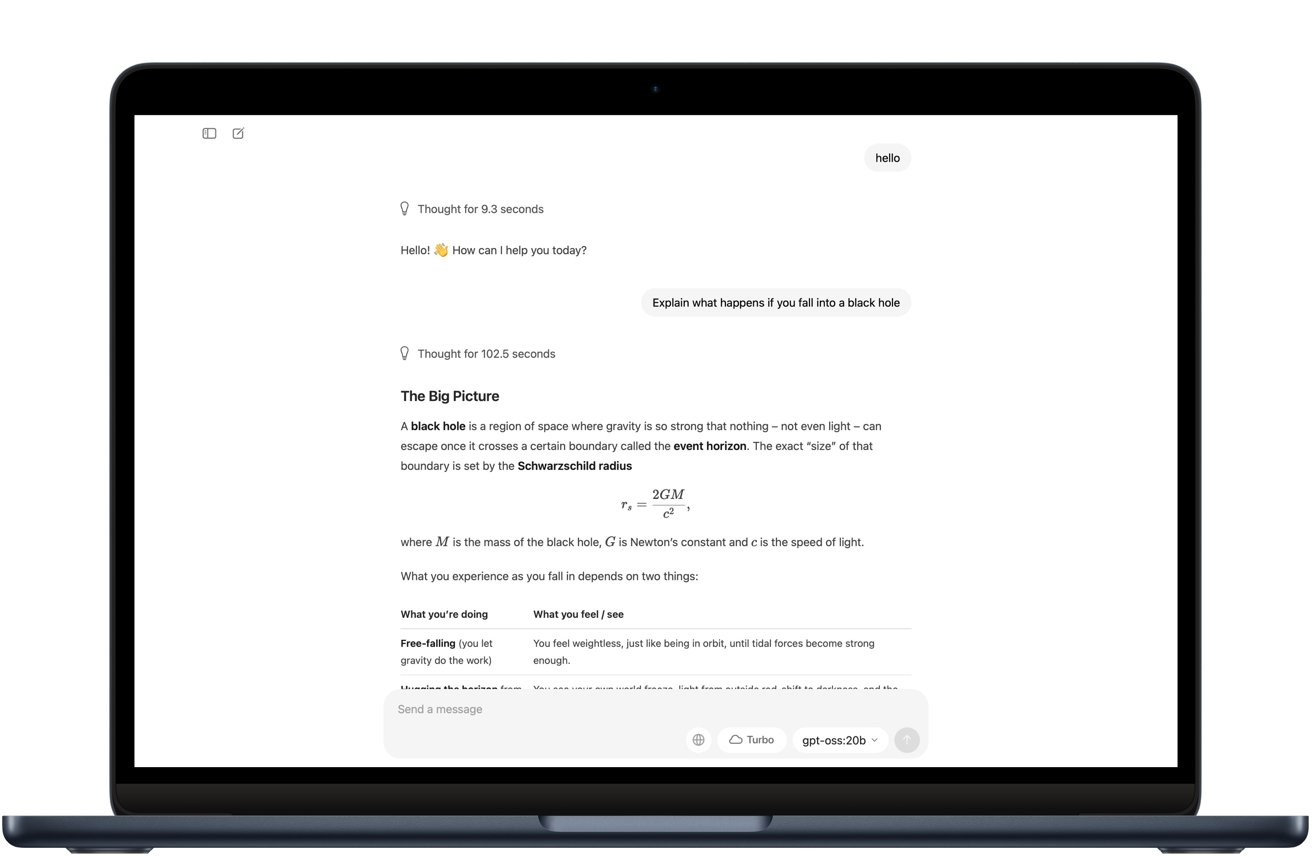

- Once it finishes loading, you'll see a prompt where you can start chatting right away.

It works just like ChatGPT, but everything runs on your Mac without needing an internet connection. In our test, the download was about 12 GB, so your Wi-Fi speed will determine how long that step takes.

On a MacBook Air with an M3 chip and 16 GB of RAM, the model ran, but responses to questions took noticeably longer than GPT-4o in the cloud. That said, the answers arrived without any internet connection.
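Once the model is running, you don't have to stay in the Terminal prompt. Ollama also exposes a local HTTP API, and the sketch below shows one way a script could send it a prompt. It assumes Ollama's default port (11434) and the model tag `gpt-oss:20b`; check `ollama list` for the exact name on your machine. The helper names `build_request` and `ask` are our own, chosen for illustration.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="gpt-oss:20b"):
    """Build the HTTP request for one non-streaming completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, model="gpt-oss:20b", timeout=300):
    """Send the prompt to the local Ollama server and return the reply text."""
    request = build_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

With Ollama running, calling `ask("Summarize this paragraph in one sentence: ...")` should return the model's answer as a string. The generous timeout is deliberate, since responses on a 16 GB Mac can take a while.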

Performance & limitations

The 20-billion-parameter model is already compressed into 4-bit format. That allows the model to run smoothly on Macs with 16 GB of RAM for a variety of tasks:

- Writing and summarizing text

- Answering questions

- Generating and debugging code

- Structured function calling

It's slower than cloud-based GPT-4o for complex tasks but responsive enough for most personal and development work. The larger 120b model requires 60 to 80 GB of memory, making it practical only for high-end workstations or research environments.
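A rough back-of-the-envelope calculation shows where those memory figures come from. The sketch below counts only the weights, using the nominal parameter counts in the model names (20 and 120 billion). It ignores the context cache, runtime overhead, and any layers kept at higher precision, so treat the results as a floor, not a measurement.

```python
def weight_memory_gb(params_billions, bits_per_weight):
    """Approximate size of the weights alone, in decimal gigabytes."""
    total_bits = params_billions * 1e9 * bits_per_weight
    return total_bits / 8 / 1e9  # bits -> bytes -> GB


# Nominal sizes taken from the model names; an assumption for illustration.
for name, params in [("gpt-oss-20b", 20), ("gpt-oss-120b", 120)]:
    fp16 = weight_memory_gb(params, 16)
    q4 = weight_memory_gb(params, 4)
    print(f"{name}: ~{fp16:.0f} GB at 16-bit, ~{q4:.0f} GB at 4-bit")
```

At 4 bits, the 20b model's weights come to roughly 10 GB and the 120b model's to roughly 60 GB, which lines up with the roughly 12 GB download and the 60 to 80 GB requirement once overhead is added.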

Why run AI locally?

Local inference keeps your data private, since nothing leaves your machine. It also avoids ongoing API or subscription fees and reduces latency by removing the need for network calls.

Because the models are released under the Apache 2.0 license, you can fine-tune them for custom workflows. That flexibility lets you shape the AI’s behavior for specialized projects.

Gpt-oss-20b is a solid choice if you need an AI model that runs entirely on your Mac without an internet connection. It's private, free to use, and reliable once it's set up. The tradeoff is speed and polish.

In testing, it took longer to respond than GPT-4 and sometimes needed a little cleanup on complex answers. For casual writing, basic coding, and research, it works fine.

If staying offline matters more to you than performance, gpt-oss-20b is one of the best options you can run today. For fast, highly accurate results, a cloud-based model is still the better fit.

Tips for the best experience

Use a quantized version of the model. Quantizing means reducing the model's precision from 16-bit floating point to 8-bit or roughly 4-bit integers.

That cuts memory use dramatically while keeping accuracy close to the original. OpenAI’s gpt-oss models use a 4-bit format called MXFP4, which lets the 20b model run on Macs with around 16 GB of RAM.
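To make the idea concrete, here is a minimal sketch of 4-bit quantization on a handful of made-up weights. It uses a plain symmetric integer scheme with one shared scale, which is not MXFP4 itself (as we understand it, MXFP4 stores tiny floating-point values with a shared scale per block), but the principle is the same: fewer bits per value, a small rounding error in return. The function names and sample numbers are ours.

```python
def quantize_int4(values):
    """Map floats to integer codes in [-7, 7] using one shared scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 7 if max_abs else 1.0
    codes = [max(-7, min(7, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate floats from the 4-bit codes."""
    return [c * scale for c in codes]


# Illustrative values only, not real model weights.
weights = [0.12, -0.70, 0.33, 0.06, -0.41, 0.68, -0.02, 0.27]
codes, scale = quantize_int4(weights)
restored = dequantize(codes, scale)
worst = max(abs(a - b) for a, b in zip(weights, restored))
print(codes, f"scale={scale:.3f}", f"worst error={worst:.3f}")
```

Each code fits in 4 bits instead of 16, and the worst-case error per value stays within half of one scale step.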

If your Mac has less than 16 GB of RAM, stick with smaller models in the 3 to 7 billion parameter range. Close memory-intensive apps before starting a session, and enable MLX or Metal acceleration when available for better performance.
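If you're not sure which category your Mac falls into, a short script can check for you. This sketch reads installed memory with the macOS `sysctl -n hw.memsize` command and applies the thresholds from this article: under 16 GB, 16 GB and up, and the top of the 60 to 80 GB range for the 120b model. The cutoffs are our reading of those figures, not official requirements, and the function names are hypothetical.

```python
import subprocess


def installed_ram_gb():
    """Read physical memory on macOS; `hw.memsize` reports bytes."""
    result = subprocess.run(
        ["sysctl", "-n", "hw.memsize"],
        capture_output=True, text=True, check=True,
    )
    return int(result.stdout.strip()) / 1024**3


def suggest_model(ram_gb):
    """Pick a model size using the rough thresholds from this article."""
    if ram_gb >= 80:
        return "gpt-oss-120b"
    if ram_gb >= 16:
        return "gpt-oss-20b"
    return "a smaller model in the 3 to 7 billion parameter range"
```

On a Mac, `suggest_model(installed_ram_gb())` returns the suggestion for your hardware. Keep in mind that other open apps compete for the same memory, so a 16 GB machine has less headroom than the number suggests.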

With the right setup, your Mac can run AI models offline without subscriptions or internet, keeping your data secure. It won't replace high-end cloud models for every task, but it’s a capable offline tool when privacy and control are important.