In today’s enterprise, having a large, unified data lakehouse is essential for activating data. With a lakehouse, organizations can transform a passive repository into a dynamic, intelligent engine that anticipates needs, automates specialized knowledge, and drives more informed decisions. At Edmunds, this priority led to the launch of Edmunds Mind, our initiative to build a sophisticated multi-agent AI ecosystem directly on the Databricks Data Intelligence Platform.

This architectural evolution is fueled by a pivotal moment in the automotive industry. Three key trends have converged:

- The rise of large language models (LLMs) as powerful reasoning engines

- The scalability and governance of platforms like Databricks as a secure foundation

- The emergence of robust agentic frameworks to orchestrate automation

These factors enable systems that would have seemed impossible just a few years ago.

This transformation is not just about adding another AI tool; it is about fundamentally redesigning our organization to operate as an AI-native one. The principles, components, and strategies behind this intelligent core are detailed in our architectural blueprint below.

“Databricks gives us a secure, governed foundation to run multiple models like GPT-4o, Claude, and Llama and switch providers as our needs evolve, all while keeping costs in check. That flexibility lets us automate review moderation and improve content quality faster, so car shoppers get trusted insights sooner.” - Gregory Rokita, VP of Technology, Edmunds

Transforming from Data-Rich to Insights-Driven

Our vision is to evolve from a data-rich company to an insights-driven organization. We leverage AI to build the industry’s most trusted, personalized, and predictive car shopping experience.

This is realized through four key strategic pillars:

- Activate Data at Scale: Transition from static dashboards to dynamic, conversational interaction with data.

- Automate Expertise: Codify the invaluable logic of our domain experts into reusable, autonomous agents.

- Accelerate Product Innovation: Provide our teams with a toolkit of intelligent agents to build next-generation features.

- Optimize Internal Operations: Drive significant efficiency gains by automating complex internal workflows.

At the heart of this vision is our most significant competitive advantage: the Edmunds Data Moat. This powerful foundation of automotive data is led by our industry-leading used vehicle inventory, the most comprehensive set of expert reviews, and best-in-class pricing intelligence, complemented by extensive consumer reviews and new vehicle listings. This complete ecosystem is unified and managed within our Databricks environment, creating a singular, powerful asset. Edmunds Mind is the engine we've built to unlock its full potential.

Inside the Digital Agent Framework

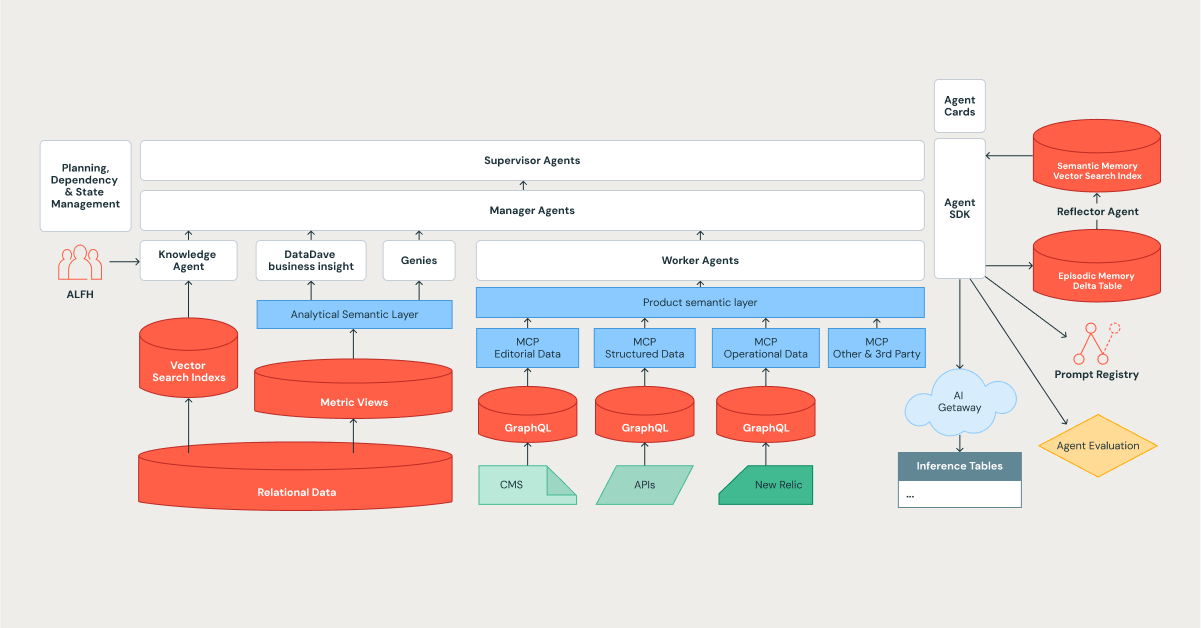

The architecture of Edmunds Mind is a hierarchical, cognitive system designed for complexity, learning, and scale, with the Databricks Platform serving as its foundation.

The Agent Hierarchy: An Organization of Digital Specialists

We designed our system to mirror an efficient organization, using a tiered structure where tasks are decomposed and delegated. This aligns well with the orchestrator patterns in modern frameworks, such as Databricks Agent Bricks.

- Supervisor Agents: The strategic leaders. They perform long-term planning, manage dependencies, and orchestrate complex, multi-stage tasks.

- Manager Agents: The team leads. They coordinate a team of specialized agents to accomplish a specific, well-defined goal.

- Worker and Specialized Agents: The individual contributors who provide specialized expertise. They are the system’s workhorses and include a growing roster of specialists, such as the Knowledge Assistant, DataDave, and various Genies.

Inter-agent communication is governed by a standardized protocol, ensuring that task delegations and data handoffs are structured, typed, and auditable, which is essential for maintaining reliability at scale.

The hierarchy is also designed for graceful failure. When a Manager Agent determines that its team of specialists cannot resolve a task, it escalates the entire task context back to the Supervisor, including the failed attempts stored in its episodic memory. The Supervisor can then re-plan with a different strategy or, crucially, flag this as a novel problem that requires human intervention to develop a new capability. This makes the system both robust and a learning tool that helps us identify the boundaries of its competence.
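To make the delegation and escalation path concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the Edmunds implementation: the `Task` schema, the function names, and the idea of modeling each specialist as a plain callable are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Task:
    """A typed task envelope passed between tiers (hypothetical schema)."""
    task_id: str
    goal: str
    failed_attempts: list[str] = field(default_factory=list)  # trail of failures
    needs_human: bool = False


# A worker takes a task and returns a result, or None if it cannot resolve it.
Worker = Callable[[Task], Optional[str]]


def manager_run(task: Task, workers: dict[str, Worker]) -> Optional[str]:
    """Try each specialist in turn and record every failure on the task."""
    for name, worker in workers.items():
        result = worker(task)
        if result is not None:
            return result
        task.failed_attempts.append(name)
    return None  # nothing worked: escalate to the caller with full context


def supervisor_run(task: Task, plans: list[dict[str, Worker]]) -> Optional[str]:
    """Re-plan with alternative teams; flag for humans when plans run out."""
    for workers in plans:
        result = manager_run(task, workers)
        if result is not None:
            return result
    task.needs_human = True  # novel problem: a new capability is needed
    return None


# Usage: the first plan fails, the second succeeds, and the failure is kept.
task = Task("t-1", "fix vehicle color")
plans = [{"vin_decoder": lambda t: None}, {"color_analyst": lambda t: "Blue"}]
outcome = supervisor_run(task, plans)
```

Because the failed attempts travel with the task, the tier that re-plans can see what was already tried, which is the property the escalation design relies on.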

Deep Dive 1: Automated Data Enrichment Workflow

Historically, resolving vehicle data inaccuracies, such as incorrect colors on a Vehicle Detail Page, was a labor-intensive process that required manual coordination across multiple teams. Today, the Edmunds Mind AI ecosystem automates and resolves these challenges in near real time. This operational efficiency is achieved through our centralized Model Serving, which consolidates our diverse AI agent capabilities into a single, cohesive environment that autoscales based on demand. This architecture frees our teams from operational overhead, allowing them to focus on delivering value to our users quickly.

The resolution process is executed through a governed, multi-agent workflow. When a user or an automated monitor flags a potential data discrepancy, a Supervisor Agent immediately triages the event. It assesses the issue, routes it to the appropriate specialized team, and validates task permissions through Unity Catalog for robust data governance. A dedicated Manager Agent then orchestrates a series of specialized Worker Agents to perform tasks ranging from VIN decoding and image retrieval to AI-powered color analysis and final database updates. Human data stewards remain integral for critical review, shifting their focus from manual intervention to the high-value approval stage. Every interaction and decision is systematically logged, building a comprehensive foundation for continuous learning and future process optimization.

This example illustrates how the complete ecosystem handles a real-world data quality and enrichment task from end to end.

- Event Trigger: A user complaint or an automated monitor flags a potential data quality issue (e.g., an incorrect vehicle color) on a Vehicle Description Page.

- Triage and Orchestration: A Supervisor Agent ingests the event, creates a trackable task, and assesses its priority based on predefined business rules.

- Delegation to Manager: The Supervisor delegates the task to the Vehicle Data Manager Agent after confirming its permissions to access and modify vehicle data in Unity Catalog.

- Coordinated Task Execution: The Manager Agent orchestrates a series of specialized Worker Agents to resolve the issue: a VIN Decoding Agent, an Image Retrieval Agent to pull photos from our media library, an AI-Powered Color Analysis Agent to determine the correct color from the images, and a Data Correction Agent to update the vehicle build database.

- Human-in-the-Loop Review: Before the change goes live, the Manager Agent flags the automated change and notifies a human data steward via a Slack integration for final validation.

- Learning and Closure: Once the steward approves the task, the Supervisor marks it as complete. The entire interaction, including the final human approval, is traced and logged to Long-Term Memory for future learning and auditing.
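The human-in-the-loop gate in the steps above can be sketched as a small state machine. This is a simplified illustration: `ColorFix`, `propose`, `review`, and `apply_fix` are hypothetical names, a dictionary stands in for the vehicle build database, and the Slack notification and Unity Catalog permission check are omitted.

```python
from dataclasses import dataclass, field


@dataclass
class ColorFix:
    """A proposed correction that must be approved before it is applied."""
    vin: str
    old_color: str
    new_color: str
    status: str = "pending_review"
    audit_log: list = field(default_factory=list)  # (event, actor) tuples


def propose(vin: str, old_color: str, new_color: str) -> ColorFix:
    """Agent side: create the proposal and record who proposed it."""
    fix = ColorFix(vin, old_color, new_color)
    fix.audit_log.append(("proposed", "agent"))
    return fix


def review(fix: ColorFix, steward: str, approved: bool) -> ColorFix:
    """Human side: a steward approves or rejects exactly once."""
    if fix.status != "pending_review":
        raise ValueError("fix has already been reviewed")
    fix.status = "approved" if approved else "rejected"
    fix.audit_log.append((fix.status, steward))
    return fix


def apply_fix(fix: ColorFix, database: dict) -> bool:
    """Write the change only if a human approved it."""
    if fix.status != "approved":
        return False
    database[fix.vin] = fix.new_color
    fix.audit_log.append(("applied", "agent"))
    return True


# Usage: nothing is written until the steward approves.
db = {"VIN123": "Red"}
fix = propose("VIN123", "Red", "Blue")
blocked = apply_fix(fix, db)          # still pending, so no write happens
review(fix, steward="steward@example.com", approved=True)
applied = apply_fix(fix, db)
```

Keeping the audit trail on the proposal itself means the approval and the write are recorded together, which mirrors the logging step that closes the workflow.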

Deep Dive 2: Knowledge Assistant: Real-Time Answers, Trusted Brand Voice

Where customers once navigated multiple Edmunds dashboards or contacted Edmunds support for answers, the Knowledge Assistant now delivers instant, conversational responses by drawing on the full spectrum of Edmunds’ data. This RAG agent is tuned to the Edmunds brand voice, weaving together insights from expert and consumer reviews, vehicle specs, media, and real-time pricing. As a result, customers experience faster, more satisfying interactions, and support staff spend less time fielding basic requests.

Key capabilities include:

- Brand Voice Personification: The agent is meticulously tuned to communicate in the lively, helpful, and trusted voice Edmunds customers have known for decades.

- Real-Time Data Synthesis: In a single query, the Assistant can retrieve, synthesize, and present information from our disparate, real-time data sources, including expert and consumer reviews, vehicle specifications, transcribed video content, and the latest pricing and incentives.

- Advanced RAG Capabilities: We are actively working with Databricks, using Vector Search, to push the boundaries of our RAG implementation. We focus on improving content recency prioritization and sophisticated metadata filtering to ensure the most relevant and timely information is always surfaced first.
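One simple way to combine metadata filtering with recency prioritization is to filter retrieved hits by type and then decay each similarity score by document age. The sketch below is plain Python and does not use the Vector Search API; the `Hit` fields, the exponential half-life formula, and the 90-day default are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Hit:
    """One retrieved document (hypothetical fields)."""
    doc_id: str
    similarity: float   # score from the vector index, higher is better
    age_days: float     # days since the content was published
    content_type: str   # e.g. "expert_review", "pricing"


def rerank(hits, allowed_types, half_life_days=90.0):
    """Keep only allowed content types, then sort by recency-decayed score."""
    kept = [h for h in hits if h.content_type in allowed_types]

    def score(h):
        # The score halves every `half_life_days` days of age.
        return h.similarity * 0.5 ** (h.age_days / half_life_days)

    return sorted(kept, key=score, reverse=True)


# Usage: a fresh review outranks a slightly more similar but year-old one.
hits = [
    Hit("old_review", 0.90, 360, "expert_review"),
    Hit("new_review", 0.80, 0, "expert_review"),
    Hit("forum_post", 0.99, 0, "forum"),
]
ranked = [h.doc_id for h in rerank(hits, {"expert_review"})]
```

The half-life is the main tuning knob: a short one suits pricing and incentives, while a longer one suits evergreen content such as long-term reviews.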

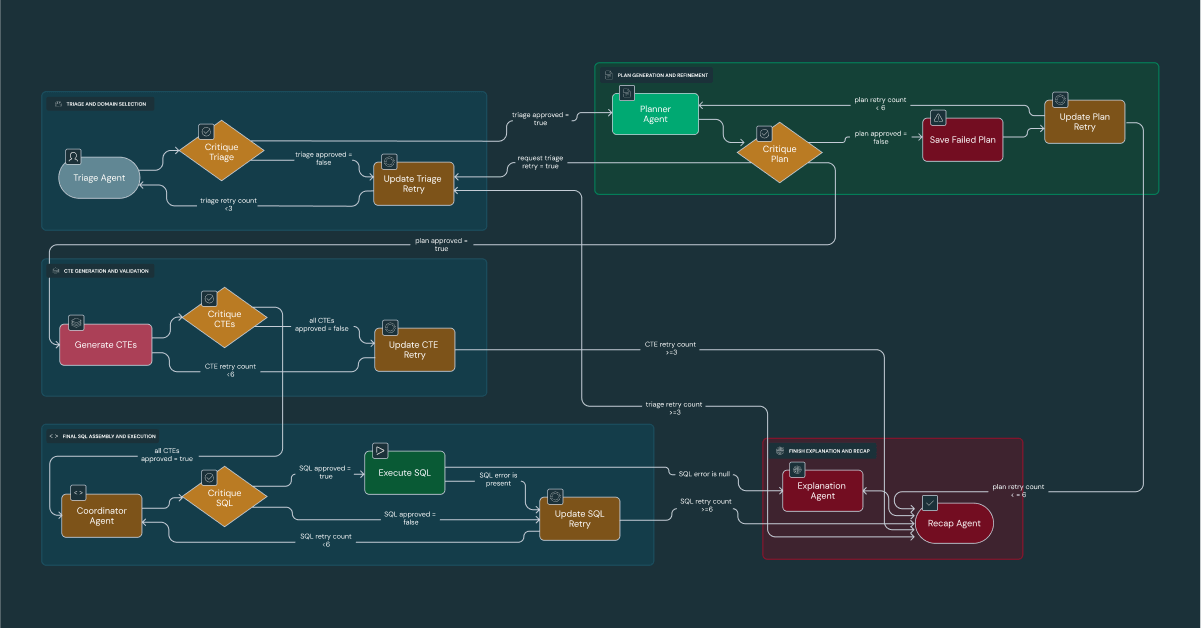

Deep Dive 3: DataDave’s “Generate-and-Critique” Workflow

DataDave now fields complex analytics that previously depended on time-intensive manual work. This agent orchestrates a rigorous workflow, with each stage critiqued by a specialist agent, to deliver 95% accuracy on the most challenging queries. DataDave can proactively identify opportunities (such as flagging underserved dealerships for the Edmunds Sales Team) by synthesizing website traffic and demographic data. This empowers Edmunds’ leadership to confidently move from reporting “what happened” to deciding “what we should do next.”

The internal workflow is a five-phase process of Triage, Planning, Code Generation, Execution, and Synthesis, with a dedicated Critique agent validating the output of each phase. Beyond simply analyzing internal metrics, DataDave’s true power lies in its ability to synthesize our proprietary data with generalized world knowledge to generate strategic recommendations. For instance, by correlating Edmunds’ website traffic data with geographical and demographic data, DataDave can identify dealerships in underserved areas and proactively recommend them to our sales team as “low-hanging fruit.”
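The five-phase loop can be sketched as a generator and a critic that alternate until each phase passes. This is a minimal sketch under assumptions: the `generate` and `critique` callables stand in for LLM-backed agents, and the retry limit and feedback-passing convention are hypothetical rather than taken from DataDave itself.

```python
from typing import Callable, Tuple

PHASES = ["triage", "planning", "code_generation", "execution", "synthesis"]

# generate(phase, input, feedback) -> output
Generator = Callable[[str, str, str], str]
# critique(phase, output) -> (accepted, feedback)
Critic = Callable[[str, str], Tuple[bool, str]]


def run_pipeline(question: str, generate: Generator, critique: Critic,
                 max_retries: int = 2) -> str:
    """Run each phase, retrying with critic feedback until it is accepted."""
    artifact = question
    for phase in PHASES:
        feedback = ""
        for _ in range(max_retries + 1):
            candidate = generate(phase, artifact, feedback)
            accepted, feedback = critique(phase, candidate)
            if accepted:
                artifact = candidate  # output of one phase feeds the next
                break
        else:
            # No attempt passed critique within the retry budget.
            raise RuntimeError(f"{phase} failed critique after retries")
    return artifact


# Usage with trivial stand-ins for the generator and the critic.
def toy_generate(phase, text, feedback):
    return f"{text}>{phase}"


def always_accept(phase, output):
    return True, ""


result = run_pipeline("q", toy_generate, always_accept)
```

Validating every phase, rather than only the final answer, stops an early mistake such as a bad plan from propagating into generated code and its results.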

Deep Dive 4: Specialization in Pricing

At Edmunds, we operate on a core principle: a price is not just a number; it's a conclusion that requires context and justification to be trusted. Leveraging our reputation for the most accurate pricing in the U.S. market, our agent architecture is designed to deliver this confidence at scale.

Our experience evolving a monolithic “Pricing Expert” into a coordinated team of specialists demonstrates this principle. This team, orchestrated by a Supervisor Agent and including specialists like a True Market Value Agent, a Depreciation Agent, and a Deal Rating Agent, produces more than just a sticker price. The final output is a comprehensive, contextualized pricing story that explains why a vehicle is valued a certain way.

This transforms the role of our pricing analysts from manual data aggregation to strategic oversight and guidance. By leveraging Databricks Agent Bricks, our pricing statisticians can configure these hierarchical agent teams with limited coding, dramatically increasing their productivity and reducing maintenance overhead. This empowers them to focus on what truly matters: the “why” behind the numbers.

The Cognitive Core: An Architecture for Compounding Intelligence

Our journey toward a truly intelligent AI ecosystem began with a practical challenge. While deploying specialist agents like DataDave for business analytics, we discovered they were uncovering critical, time-sensitive business truths that remained siloed within their operational context. For example, an agent might detect an anomalous downtrend in a key marketing channel, but this vital insight needs to be communicated effectively to other entities, both agents and humans, to trigger a coordinated response. This highlighted a fundamental need: a shared memory system that could capture these emergent learnings and make them accessible as input to the entire agentic system. We envisioned a cognitive layer where this knowledge could accumulate, grow, and be leveraged to make our entire ecosystem progressively smarter. Our latest thinking and design is as follows.

- Episodic Memory (“What Happened”): A high-fidelity log of every agent action and observation, serving as the system’s ground truth.

- Semantic Memory (“What Was Learned”): A vector index containing generalized insights and successful strategies synthesized from episodic events. This will be the library of actionable knowledge.

- Automated Memory Consolidation: A background “Reflector” agent periodically reviews episodic memory to identify and consolidate key learnings into semantic memory.

- Hierarchical Memory Access: Higher-level agents can access the memories of their subordinates, allowing a Supervisor Agent to analyze team performance and optimize future strategies. This feedback loop is central to our system’s antifragility; every novel failure escalated through the hierarchy is not just a problem to be solved, but a signal that trains the entire ecosystem, making it progressively more intelligent and resilient.

Implementation: mem0 + Databricks

Our implementation will be powered by Databricks Vector Search using a Delta Sync Index, which is fully compatible with the mem0 interface. Given that mem0 interacts with vector databases, we will innovate by storing both episodic and semantic memories within a single, powerful backend. Raw, unsummarized events (“what happened”) and synthesized learnings (“what was learned”) will coexist as distinct vector types within the same source Delta table, which then seamlessly and automatically populates the Vector Search index.

This unified architecture creates an efficient workflow. The Reflector agent can query the index for recent episodic entries, perform its synthesis, and write the new, generalized semantic vectors back into the source Delta table. The Delta Sync Index then automatically ingests these new learnings, making them available for querying. By leveraging the source Delta table as the single point of entry, we eliminate data pipeline complexity and gain the scalable, serverless, and low-latency foundation required for a truly intelligent agentic system.
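The single-table design can be illustrated with an in-memory stand-in. The sketch below deliberately uses neither the mem0 API nor Databricks Vector Search: `MemoryTable`, `MemoryRow`, and `reflect` are hypothetical names, a Python list replaces the Delta table, and a simple frequency count replaces the LLM-based synthesis a real Reflector would perform.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class MemoryRow:
    """One row of the shared table; memory_type separates the two kinds."""
    memory_type: str        # "episodic" or "semantic"
    agent: str
    text: str
    reflected: bool = False  # has the Reflector already processed this row?


class MemoryTable:
    """In-memory stand-in for the single source table."""

    def __init__(self):
        self.rows = []

    def log_event(self, agent: str, text: str) -> None:
        self.rows.append(MemoryRow("episodic", agent, text))

    def unreflected(self):
        return [r for r in self.rows
                if r.memory_type == "episodic" and not r.reflected]


def reflect(table: MemoryTable, min_count: int = 2):
    """Promote events seen at least min_count times into semantic rows."""
    pending = table.unreflected()
    counts = Counter(r.text for r in pending)
    insights = []
    for text, n in counts.items():
        if n >= min_count:
            insight = f"Recurring pattern ({n}x): {text}"
            # Written back to the same table, flagged as a learning.
            table.rows.append(MemoryRow("semantic", "reflector", insight))
            insights.append(insight)
    for row in pending:
        row.reflected = True  # each episode is consolidated only once
    return insights


# Usage: two matching episodes consolidate into one semantic insight.
table = MemoryTable()
table.log_event("DataDave", "sales dip on weekend")
table.log_event("DataDave", "sales dip on weekend")
table.log_event("DataDave", "traffic spike")
first_pass = reflect(table)
second_pass = reflect(table)
```

Because both memory types live in one table and differ only by a type column, the Reflector needs a single read path and a single write path, which is the pipeline simplification described above.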

Example Workflow with Edmunds Pulse

- Log: The ‘DataDave’ agent detects a sales anomaly and logs the event to its Episodic Memory via the mem0 API. This action writes a new vector entry into our source Delta table.

- Synthesize: The Reflector agent processes this event, generates a generalized insight (e.g., “Product X sales dip on weekends”), and converts it into a vector embedding.

- Index: The new insight is written back to the source Delta table, but flagged as a synthesized learning. Databricks Vector Search automatically syncs this new entry, indexing it into the semantic memory.

- Deliver: Finally, a dedicated Edmunds Pulse agent, which constantly monitors the semantic memory for high-priority intelligence, proactively delivers this synthesized finding to a human stakeholder. Drawing a parallel to the ChatGPT Pulse launch, which aims to provide a more ambient and aware AI assistant, our Edmunds Pulse will act as the live ‘pulse’ of the business, ensuring critical insights are not just stored but actively communicated to drive timely and intelligent action.

The Data and Knowledge Layer: A Governed Foundation of Truth

AI agents depend on the quality of their data. The Edmunds data layer is purpose-built for consistency, governance, and flexibility, with Unity Catalog serving as the cornerstone to ensure that all information remains accurate and well-managed.

Deep Dive 5: GraphQL Data Access and Interactivity Patterns

The Edmunds Model Context Protocol (MCP) framework securely connects AI agents to real-time context from all core data sources, such as vehicle specs, reviews, inventory, and operational metrics from systems like New Relic. This is achieved through a unified GraphQL API gateway, which abstracts away the underlying complexity and presents a strongly typed, self-documenting schema.

Instead of agents or engineers struggling with fragmented data, mismatched schemas, or slow troubleshooting, the system now supports three primary interactivity patterns, each tuned for a different use case:

- Dynamic Schema Introspection: Agents can dynamically explore new or unfamiliar queries by introspecting the GraphQL schema itself. When a customer asks a novel question, such as whether a vehicle’s price is affected by recent safety recalls, the agent can discover new data types on the fly and craft precise queries to fetch relevant answers. This flexibility enables the team to quickly adapt to new business requirements without requiring manual API changes.

- Granular Mapped Tools: For routine operations, each agent tool is mapped directly to a specific GraphQL query or mutation. For example, updating a vehicle’s color is as simple as extracting the VIN and new color, with the agent handling the mutation. This approach increases reliability and reduces manual intervention, streamlining daily team tasks.

- Persisted Queries: High-traffic, performance-critical functions, such as real-time inventory dashboards, leverage pre-registered queries for optimal efficiency. The agent sends a lightweight hash and variables, and the system returns results instantly with reduced bandwidth and enhanced security.
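The difference between a mapped tool and a persisted query shows up in the request body each one sends. The sketch below only builds payloads and makes no network calls. The `updateVehicleColor` mutation and its fields are hypothetical, and the `extensions.persistedQuery` shape follows the convention used by Apollo's Automatic Persisted Queries, which is an assumption about how such a gateway might be configured rather than a description of the Edmunds API.

```python
import hashlib

# Hypothetical mutation; the real schema's names and types are not known here.
UPDATE_COLOR = """
mutation UpdateColor($vin: String!, $color: String!) {
  updateVehicleColor(vin: $vin, color: $color) { vin color }
}
""".strip()


def query_hash(query: str) -> str:
    """SHA-256 hex digest used to identify a pre-registered query."""
    return hashlib.sha256(query.encode("utf-8")).hexdigest()


def mapped_tool_payload(vin: str, color: str) -> dict:
    """Granular mapped tool: a fixed mutation, the agent only fills variables."""
    return {"query": UPDATE_COLOR, "variables": {"vin": vin, "color": color}}


def persisted_payload(query: str, variables: dict) -> dict:
    """Persisted query: send the hash and variables, never the query text."""
    return {
        "variables": variables,
        "extensions": {
            "persistedQuery": {"version": 1, "sha256Hash": query_hash(query)}
        },
    }


full = mapped_tool_payload("VIN123", "Blue")
slim = persisted_payload(UPDATE_COLOR, {"vin": "VIN123", "color": "Blue"})
```

Since the server only executes hashes it has registered, arbitrary query text from a client is never run, which is where the security benefit comes from; the bandwidth saving grows with the size of the query document.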

Edmunds has dramatically improved the speed, flexibility, and reliability of data operations across product and support functions by giving AI agents structured access to all business data through a single, robust API layer. Tasks that previously required custom development or cross-team debugging are now handled in real time, allowing customers and internal teams to benefit from richer insights and more agile responses.

Deep Dive 6: The Semantic and Knowledge Layers

This crucial layer serves as the bridge between raw data and agent comprehension. It abstracts away the complexity of underlying data stores and enriches the data with business context, ensuring agents operate on a consistent, governed, and understandable view of the Edmunds universe.

- Unity Catalog: The Governance Backbone: At the core of our data ecosystem, Unity Catalog provides centralized governance, security, and lineage for all data and AI assets. It ensures that every piece of data accessed by an agent is subject to fine-grained access controls and that its journey is fully auditable, forming the non-negotiable foundation for a secure and compliant AI platform.

- Product Semantic Layer: Real-Time Business Context: This layer provides agents with a real-time, object-oriented view of our core product entities (e.g., vehicles, dealers, reviews). Critically, it is sourced directly from the same GraphQL schemas that power the Edmunds website. This ensures consistency: when an agent discusses a “vehicle,” it is referencing the same data model and business logic that a consumer sees on the website, eliminating the risk of data drift between our external products and our internal AI.

- Analytical Semantic Layer: The Single Source of Truth for KPIs: This layer provides a consistent and trusted view of all business performance metrics. It is sourced directly from our curated Delta Metric Views, the same source that feeds all executive and operational dashboards. This alignment ensures that when DataDave or other agents report on business KPIs (like session traffic, leads, or appraisal rates), they use the same definitions and data sources as our established business intelligence tools, maintaining a single source of truth across the organization.

- Databricks Vector Search: The Engine for RAG: This component is the high-performance retrieval engine for our unstructured and semi-structured data. By converting our vast corpus of reviews, articles, and transcribed content into vector embeddings, we enable agents like the Knowledge Assistant to perform fast semantic searches, retrieving the most relevant context to answer user queries in a Retrieval-Augmented Generation (RAG) pattern.

From Cost Center to Value Engine: Measuring Our AI ROI

A visionary architecture is only as good as its execution. Our approach is grounded in a phased roadmap and a deep commitment to treating our AI ecosystem as a core, value-generating engine. We achieve this by directly linking our technical framework for observability, governance, and ethics to key business outcomes. Our goal isn't just to build powerful AI; it's to quantify its impact on our bottom line.

Accelerating Business Velocity

We've built a holistic system to measure both sides of the ROI equation. On the return side, our framework connects AI performance directly to business KPIs. For example:

- Our DataDave agent delivers complex, actionable analytics in minutes, a task that previously took human Edmunds analysts hours to complete. This dramatically accelerates data-driven decision-making.

- Our pricing agents respond instantly to inquiries, eliminating hours of manual research and freeing up our teams to focus on strategic, high-value work.

While we are still quantifying the precise impact on metrics like campaign conversion rates, this framework provides the real-time data needed to draw these correlations.

Optimizing for Cost

We practice smart economic governance through our AI Gateway. High-stakes agents like DataDave are routed to our most powerful models to ensure accuracy, while routine tasks are automatically assigned to more cost-effective models. This model tiering strategy allows us to precisely manage our LLM and compute spend, ensuring every dollar invested is aligned with the business value it creates.
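A tiering policy of this kind reduces to a lookup from agent to model tier. The sketch below is illustrative only: the model names, the per-token prices, and the `ReviewTagger` agent are invented placeholders, and the routing is done in plain Python rather than through any AI Gateway configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    model: str
    cost_per_1k_tokens: float  # illustrative price, not a real rate


# Placeholder tiers; real model names and prices would come from configuration.
ROUTES = {
    "premium": Route("large-model", 0.01),
    "economy": Route("small-model", 0.0005),
}

# Agents whose answers are costly to get wrong go to the premium tier.
HIGH_STAKES_AGENTS = {"DataDave"}


def pick_route(agent: str) -> Route:
    """Route high-stakes agents to the strongest model, the rest to economy."""
    tier = "premium" if agent in HIGH_STAKES_AGENTS else "economy"
    return ROUTES[tier]


def estimated_cost(agent: str, tokens: int) -> float:
    """Estimated spend for one request of the given size."""
    return pick_route(agent).cost_per_1k_tokens * tokens / 1000


premium_cost = estimated_cost("DataDave", 2000)
economy_cost = estimated_cost("ReviewTagger", 2000)
```

Keeping the policy in one table makes the cost trade-off explicit and auditable: moving an agent between tiers is a one-line change whose spend impact can be estimated before it ships.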

“Databricks lets us run the right model for the right task–securely and at scale. That flexibility powers our agents and delivers smarter car shopping experiences.” - Greg Rokita, VP of Technology, Edmunds

Organizational Enablement: Empowering Every Employee

To bring this vision to life, we are fostering a culture of innovation across Edmunds. We aim to support a full spectrum of human-AI interaction, from fully autonomous tasks to human-in-the-loop reviews and fully collaborative problem-solving.

To support this, we provide a robust Agent SDK for engineers and champion a “Citizen Developer” movement through our Agent Bricks platform. This initiative was kicked off with our company-wide “AI Agents @ Edmunds” tech conference and is nurtured by an active LLM Agents Guild, ensuring that every employee has the tools and support to contribute to our AI-driven future.

The Road Ahead: From Proactive Intelligence to True Autonomy

Our journey to becoming a truly AI-native organization is a marathon, not a sprint. The “Edmunds Mind” architecture serves as our blueprint for that journey, and its next evolutionary step is to develop proactive agents that not only answer questions but also anticipate business needs. We envision a future where our agents identify market opportunities from real-time data streams and deliver strategic insights to stakeholders before they even ask.

Ultimately, our roadmap leads to a system where agents can self-optimize: proposing new tools, refining critique mechanisms, and even suggesting architectural improvements. This marks a transition from a system we merely operate to a true cognitive partner, evolving our roles from operators to the overseers, ethicists, and strategists of a new, intelligent workforce.

Learn more about how Edmunds is building an AI-driven car buying experience with the help of Databricks.