SEO is an ever-elusive acronym, but what is search engine optimisation? In reality, it’s probably the case that nobody truly understands how search engines evaluate web content. It’s a constantly evolving game and the rules are continually being redefined, especially in our new AI-dominated search world.

Even those at Google sometimes struggle to understand how their algorithms index a piece of content, especially when there are more than 200 search ranking factors. SEO brings with it new ideas, new information and new concepts. Google’s crawl bots decide what content to show for which search query; it’s just a matter of understanding the language used to communicate this content across the internet. We can discuss technical concepts like search engine indexes, nofollow links, mobile-first indexing, sitemap files and server-side rendering all we like: all of them have a part to play in the success of a site.

However, this detailed guide focuses on the key elements of crawlability and indexability, and the role that each plays in the technical health of your site.

What’s Crawlability?

Before Google can index a piece of content, it must first be given access to it. A search engine crawler (or spider) is a bot that scans the content on a webpage; only once a crawler can reach your page can Google determine its place in the search engine results pages (SERPs). If Google’s algorithms cannot find your content, they cannot list it.

Think about a time before the internet. Businesses used to advertise and promote their services in resources like the Yellow Pages, far removed from what we do today: going straight to the Google search bar. Back then, a person could choose to list their phone number for others to find, or choose not to list a number and remain unknown.

It’s the same concept when it comes to Google search. Your web page (whether that’s a blog post or otherwise) must give crawlers permission so it can be stored in the search engine indexes.

Why is Crawlability Important?

Crawlability is the foundation of your entire SEO strategy. Without it, even top-notch content simply won’t appear in Google search results, regardless of its quality or relevance. Search engine bots need to be able to discover and navigate your website efficiently to understand what your pages are about.

Poor crawlability leads to:

- Incomplete indexing of your website

- Lower organic traffic

- Relevant content failing to gain the traction it deserves

- Reduced visibility in search results

Even the most valuable content offers zero value if search engines can’t find it in the first place. That’s why it’s important to address crawlability issues whenever they crop up.

What Is Indexability?

While crawlability refers to search engines’ ability to access and crawl your web pages, indexability determines whether those pages can be added to a search engine’s index.

Think of it this way: crawlability is about search engine bots being able to discover your content, whereas indexability is about whether those pages are eligible to appear in search results once they have been discovered.

A page can be crawlable but not indexable due to factors like:

- A “noindex” tag in the HTML

- Canonical tags pointing to other pages

- Low-quality or duplicate content that search engines choose not to index

- Blocking the page in robots.txt (strictly a crawlability issue, but it affects indexing too: Google can’t see a noindex tag on a page it isn’t allowed to crawl, and may still index the bare URL if other pages link to it)
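To make the first two factors concrete, here is a minimal sketch that uses only Python’s standard-library `html.parser` to scan a page’s HTML for a robots meta tag containing noindex and for a canonical link pointing somewhere else. The names `IndexabilitySignals` and `indexability_signals` and the sample HTML are hypothetical. The sketch also ignores the `X-Robots-Tag` HTTP header, which can carry noindex as well.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class IndexabilitySignals(HTMLParser):
    """Collects robots meta directives and the canonical URL from a page's HTML."""

    def __init__(self):
        super().__init__()
        self.robots_directives = set()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        # html.parser lower-cases tag and attribute names; values may be None.
        attrs = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attrs.get("name", "").lower() in ("robots", "googlebot"):
            # e.g. content="noindex, follow" -> {"noindex", "follow"}
            for directive in attrs.get("content", "").split(","):
                self.robots_directives.add(directive.strip().lower())
        elif tag == "link" and "canonical" in attrs.get("rel", "").lower().split():
            self.canonical = attrs.get("href") or None


def indexability_signals(page_url, html):
    """Return reasons the page may be left out of the index (hypothetical helper)."""
    parser = IndexabilitySignals()
    parser.feed(html)
    parser.close()
    reasons = []
    # "none" is shorthand for "noindex, nofollow".
    if parser.robots_directives & {"noindex", "none"}:
        reasons.append("robots meta tag contains noindex")
    if parser.canonical:
        canonical = urljoin(page_url, parser.canonical)
        if canonical.rstrip("/") != page_url.rstrip("/"):
            reasons.append(f"canonical points to {canonical}")
    return reasons


html = """<html><head>
<meta name="robots" content="noindex, follow">
<link rel="canonical" href="/guides/crawlability/">
</head><body>...</body></html>"""
print(indexability_signals("https://www.example.com/guides/crawlability-old/", html))
# ['robots meta tag contains noindex', 'canonical points to https://www.example.com/guides/crawlability/']
```

A page that passes this check can still be left out for quality or duplication reasons, which no HTML scan can detect.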

Robots.txt Files: How Do They Work?

Websites use a text file called robots.txt. It’s the standard that crawlers live by, and it outlines the permissions a crawler has on a website (i.e., what it can and cannot crawl). Robots.txt is part of the Robots Exclusion Protocol (REP), a group of web standards that regulate how robot crawlers can access the internet.

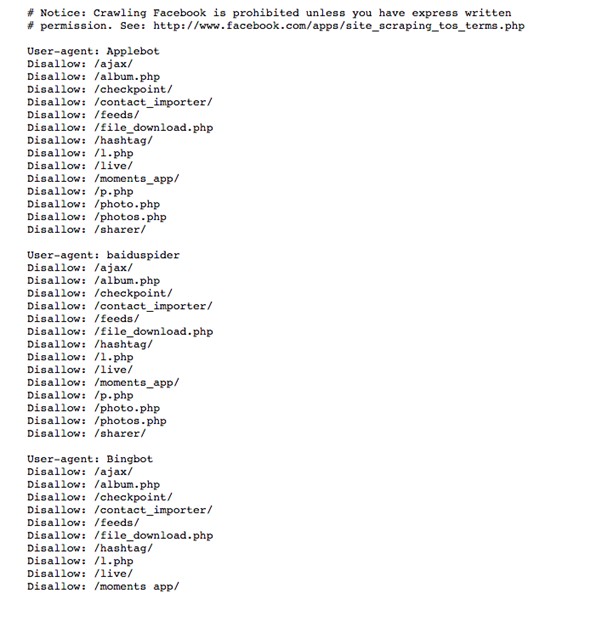

Need an instance? Sort an internet site URL into your search browser and embrace ‘/robots.txt’ on the finish. You must end up with a textual content file that outlines the permissions {that a} crawler has on an internet site. For instance, right here is Fb’s robots.txt file:

So what we see here is that Bingbot (the crawler used by Bing) cannot access any URL that begins with ‘/photo.php’. This means Bing cannot index users’ Facebook photos on its SERPs unless those photos exist outside of ‘/photo.php’.

By understanding robots.txt files, you can begin to understand the first stage a crawler (also called a spider; Googlebot is Google’s own crawler) goes through to index your website. So, here’s an exercise for you:

Go to your website, find your robots.txt file and get familiar with what you do and don’t allow crawlers to do. Here’s some terminology so you can follow along:

- User-agent: The specific web crawler to which you’re giving crawl instructions (usually a search engine).

- Disallow: The command used to tell a user-agent not to crawl a particular URL.

- Allow: The command used to tell a crawler it can access a page or subfolder even though its parent page or subfolder is disallowed. Googlebot supports it, as do other major crawlers such as Bingbot, and it is part of the standardised Robots Exclusion Protocol (RFC 9309).

- Crawl-delay: How many seconds a crawler should wait between requests before loading and crawling more page content. Note that Googlebot ignores this directive.

- Sitemap: Used to call out the location of any XML sitemaps associated with the website.
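You can try these directives out with Python’s standard-library `urllib.robotparser` without fetching anything over the network. The robots.txt content and example.com URLs below are hypothetical. There are two caveats. First, this parser applies rules in the order they appear (the first match wins) rather than Google’s most-specific-match rule, so the Allow line is placed before the broader Disallow to give the same answer under both. Second, it reports a Crawl-delay even though Googlebot ignores that directive. `site_maps()` needs Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt using each directive described above.
ROBOTS_TXT = """\
User-agent: Googlebot
Allow: /private/press-kit/
Disallow: /private/

User-agent: Bingbot
Crawl-delay: 10
Disallow: /private/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Allow re-opens one subfolder inside a disallowed folder.
print(parser.can_fetch("Googlebot", "https://www.example.com/private/press-kit/logo.png"))  # True
print(parser.can_fetch("Googlebot", "https://www.example.com/private/report.pdf"))  # False
# Crawl-delay (in seconds) only applies to the group it appears in.
print(parser.crawl_delay("Bingbot"))  # 10
print(parser.site_maps())  # ['https://www.example.com/sitemap.xml']
```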

How Do Crawlability and Indexability Affect SEO?

Crawlability and indexability form the foundation of your SEO efforts. They act as a roadmap for search engines, determining how they discover, interpret, and rank your content for relevant search queries.

When these elements work together effectively:

- Search engines can easily find and understand your content

- Your pages appear in search results for relevant queries

- Users can discover your website through organic search

- Your technical SEO health improves, supporting your broader digital marketing strategy

When either crawlability or indexability issues exist, your site’s visibility suffers regardless of your content quality or other optimisation efforts.

What Do Search Engine Crawlers Look For?

A crawler looks for specific technical elements on a web page to determine what the content is about and how valuable that content is. When a crawler arrives at a site, the first thing it does is read the robots.txt file to understand its permissions. Once it has permission to crawl a web page, it then looks at:

- HTTP headers (Hypertext Transfer Protocol): HTTP headers carry information about the visitor’s browser, the requested page, the server and more. Status codes like 200 (success) or 404 (page not found) tell crawlers whether a page is available.

- Meta tags: These are snippets of text that describe what a web page is about, much like the synopsis of a book. Meta descriptions and title tags help crawlers understand page content.

- Page headings: H1 and H2 tags give crawlers a quick sense of what the content is about and create a logical content hierarchy for the body copy that follows.

- Images: Images should include alt text, a short description telling crawlers what the image shows and how it relates to the content. This is essential for both SEO and accessibility.

- Body text: Of course, crawlers will read your body copy to understand what a web page is all about, analysing keyword usage and topic relevance.

- Internal links: How pages connect to one another helps crawlers understand your site structure and content relationships.
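As a rough illustration of the on-page elements listed above, the sketch below uses only standard-library Python to pull a page’s title, meta description, H1/H2 headings, images without an alt attribute and internal links out of raw HTML. The `PageAudit` class and the sample markup are hypothetical. Real crawlers also render JavaScript and weigh these signals in ways that are not public.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class PageAudit(HTMLParser):
    """Collects a few on-page elements a crawler looks at (illustrative only)."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.title = ""
        self.meta_description = None
        self.headings = []            # (tag, text) pairs for h1/h2
        self.images_missing_alt = []  # src of <img> tags with no alt attribute
        self.internal_links = set()
        self._current = None          # tag whose text is being captured

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("title", "h1", "h2"):
            self._current = tag
            if tag != "title":
                self.headings.append((tag, ""))
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content")
        elif tag == "img" and "alt" not in attrs:
            # Only a missing attribute is flagged; alt="" is fine for decorative images.
            self.images_missing_alt.append(attrs.get("src"))
        elif tag == "a" and attrs.get("href"):
            link = urljoin(self.page_url, attrs["href"]).split("#")[0]
            # Links to the same host as the page count as internal.
            if urlparse(link).netloc == urlparse(self.page_url).netloc:
                self.internal_links.add(link)

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current == "title":
            self.title += data
        elif self._current in ("h1", "h2"):
            tag, text = self.headings[-1]
            self.headings[-1] = (tag, text + data)


html = """<html><head><title>Crawlability Guide</title>
<meta name="description" content="How crawlers find pages."></head>
<body><h1>What Is Crawlability?</h1><img src="/bot.png">
<h2>Robots.txt</h2><a href="/sitemap.xml">Sitemap</a>
<a href="https://other.example.org/">External</a></body></html>"""

audit = PageAudit("https://www.example.com/guide/")
audit.feed(html)
print(audit.title)               # Crawlability Guide
print(audit.headings)            # [('h1', 'What Is Crawlability?'), ('h2', 'Robots.txt')]
print(audit.images_missing_alt)  # ['/bot.png']
print(audit.internal_links)      # {'https://www.example.com/sitemap.xml'}
```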

With this information, a crawler can build a picture of what a piece of content is saying and how valuable it is to a real human reading it.

What Affects Crawlability and Indexability?

Several technical factors can affect your site’s crawlability and indexability:

XML Sitemap

An XML sitemap serves as a roadmap for search engine bots, listing all the important pages on your website that should be crawled. A well-structured sitemap helps search engines discover new and updated content more efficiently.

Google Search Console and Bing Webmaster Tools let you submit your sitemap URL directly, signalling to search engines which pages are most important. John Mueller of Google has repeatedly emphasised the importance of sitemaps, especially for large or complex websites.
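For reference, a minimal XML sitemap follows the sitemaps.org protocol. It has a `<urlset>` root element in the `http://www.sitemaps.org/schemas/sitemap/0.9` namespace, with one `<url>` entry per page, each holding a `<loc>` and an optional `<lastmod>`. The sketch below builds one with Python’s `xml.etree.ElementTree`. The `build_sitemap` name, page URLs and dates are hypothetical. Larger sites also have to respect the protocol’s limit of 50,000 URLs per sitemap file.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, lastmod) pairs; lastmod is a W3C date string or None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as & automatically, as the protocol requires.
        ET.SubElement(entry, "loc").text = url
        if lastmod:
            ET.SubElement(entry, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


xml = build_sitemap([
    ("https://www.example.com/", "2024-05-01"),
    ("https://www.example.com/guides/crawlability/", None),
])
print(xml)
```

The resulting file is what you would then submit through Google Search Console or Bing Webmaster Tools, or reference from a `Sitemap:` line in robots.txt.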

Internal Linking Structure

Your internal linking structure helps search engines understand your site hierarchy and content relationships. Contextual links between related pages help crawlers discover content and understand topic relevance.

Poor site structure, with orphaned pages (no internal links pointing to them) or deep pages (requiring many clicks from the homepage), can hinder crawlability. A logical URL structure and site architecture improve both bot crawling and user experience.
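Orphaned and deep pages can be found with a breadth-first search over your internal link graph, starting at the homepage. In the sketch below, the link graph is a hypothetical hand-written dictionary (in practice it would come from a site crawl) and the set of known pages would come from your sitemap or CMS. Any known page the search never reaches has no internal path from the homepage. The 3-click depth threshold is an illustrative assumption, not a Google rule.

```python
from collections import deque


def click_depths(links, start):
    """Breadth-first search: minimum number of clicks from `start` to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link graph: page -> pages it links to.
links = {
    "/": ["/blog/", "/services/"],
    "/blog/": ["/blog/crawlability/"],
    "/blog/crawlability/": ["/blog/indexability/"],
    "/blog/indexability/": ["/blog/robots-txt/"],
    "/services/": [],
}
# Every page you expect to be indexed, e.g. taken from the XML sitemap.
known_pages = {"/", "/blog/", "/services/", "/blog/crawlability/",
               "/blog/indexability/", "/blog/robots-txt/", "/old-landing-page/"}

depths = click_depths(links, "/")
orphans = sorted(known_pages - set(depths))
too_deep = sorted(page for page, depth in depths.items() if depth > 3)  # assumed threshold
print("Orphaned:", orphans)   # Orphaned: ['/old-landing-page/']
print("Too deep:", too_deep)  # Too deep: ['/blog/robots-txt/']
```

Breadth-first search is used because it visits pages in order of distance from the homepage, so the first depth recorded for each page is its shortest click path.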

Technical Issues

Various technical SEO issues can affect crawlability and indexability:

- Server errors: 5xx server errors prevent crawlers from accessing content

- 4xx errors: Pages returning 4xx status codes (such as 404 “not found” errors) waste crawl budget and create dead ends

- Redirect loops: Circular redirects confuse crawlers and prevent pages from being indexed

- Duplicate content: Multiple versions of the same content confuse search engines

- Slow loading times: A page with a poor load time may be crawled less frequently

- Mobile optimisation issues: With mobile-first indexing, pages that aren’t mobile-friendly may suffer
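Redirect chains and loops are easy to spot once you have a map of which URL redirects where (for instance, exported from a site crawl). The sketch below follows each hop and reports either the final destination or a loop. The `trace_redirects` function and redirect map are hypothetical. The 5-hop limit is an illustrative assumption; Google’s documentation states that Googlebot follows up to 10 redirect hops.

```python
def trace_redirects(redirects, url, max_hops=5):
    """Follow a redirect map starting at `url`.

    Returns (chain, problem): chain lists every URL visited, and problem is
    None, "loop" or "too many hops".
    """
    chain = [url]
    seen = {url}
    while url in redirects:
        url = redirects[url]
        chain.append(url)
        if url in seen:
            return chain, "loop"
        if len(chain) - 1 > max_hops:
            return chain, "too many hops"
        seen.add(url)
    return chain, None


# Hypothetical redirect map: source URL -> Location header target.
redirects = {
    "/old-page": "/newer-page",
    "/newer-page": "/final-page",
    "/a": "/b",
    "/b": "/a",
}

for start in ("/old-page", "/a"):
    chain, problem = trace_redirects(redirects, start)
    print(" -> ".join(chain), "|", problem or "ok")
# /old-page -> /newer-page -> /final-page | ok
# /a -> /b -> /a | loop
```

Even a chain that resolves, like the first one, is worth collapsing so that the original URL redirects straight to the final page in a single hop.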

Content Quality

Search engines increasingly evaluate content based on its value to human users:

- Relevance to search intent: Content that addresses what users are actually searching for

- High-quality content: Comprehensive, accurate, and well-structured information

- Redundant or outdated content: Pages with little unique value may be deprioritised

- Thin content: Pages with minimal substantive information may be excluded from indexing
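A crude first pass at spotting thin or duplicate pages is to count words and compare normalised text. In the sketch below, the 300-word threshold is an arbitrary assumption (search engines publish no minimum word count), and exact hashing only catches verbatim duplicates; near-duplicate detection would need a technique such as shingling. The function names and page texts are hypothetical.

```python
import hashlib
import re
from collections import defaultdict

THIN_WORD_COUNT = 300  # assumed threshold, not an official search engine figure


def normalise(text):
    """Lower-case and drop punctuation/extra whitespace so trivial differences don't matter."""
    return " ".join(re.findall(r"[a-z0-9']+", text.lower()))


def content_report(pages):
    """pages: dict of URL -> extracted body text. Returns (thin_urls, duplicate_groups)."""
    thin = sorted(url for url, text in pages.items()
                  if len(normalise(text).split()) < THIN_WORD_COUNT)
    by_hash = defaultdict(list)
    for url, text in pages.items():
        digest = hashlib.sha256(normalise(text).encode("utf-8")).hexdigest()
        by_hash[digest].append(url)
    duplicates = [sorted(urls) for urls in by_hash.values() if len(urls) > 1]
    return thin, duplicates


long_text = "Crawlability lets search engine bots discover your pages. " * 60
pages = {
    "/guide/": long_text,
    "/guide/?utm_source=newsletter": long_text.upper(),  # same text, different URL
    "/tag/seo/": "Posts tagged SEO.",
}
thin, duplicates = content_report(pages)
print(thin)        # ['/tag/seo/']
print(duplicates)  # [['/guide/', '/guide/?utm_source=newsletter']]
```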

Find Crawlability and Indexability Issues

Identifying potential crawlability and indexability problems requires dedicated technical SEO tools:

Google Search Console

Google Search Console (GSC) provides invaluable insights into how Google views your site:

- The URL Inspection tool shows the current index status of specific pages

- The Page indexing report (formerly the Coverage report) identifies pages with errors that prevent indexing

- The “Request Indexing” feature lets you ask Google to recrawl specific URLs

- Core Web Vitals reports highlight performance issues affecting user experience

SEO Tools

Professional SEO audit tools can identify a broader range of issues:

- Log file analysers help you see exactly how bots are crawling your site

- Crawl error reports identify broken links and redirect problems

- Site structure visualisations reveal potential navigation issues

- Technical SEO audit reports provide suggestions for improvement
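To give a feel for what a log file analyser does, the sketch below parses access-log lines in the common Apache/Nginx “combined” format and tallies requests whose user agent claims to be Googlebot, by path and by status code. The log lines and the `googlebot_hits` name are hypothetical. A user-agent string can be spoofed, so a real analysis should also verify Googlebot with a reverse DNS lookup, which this sketch does not do.

```python
import re
from collections import Counter

# Combined log format: IP - user [time] "METHOD path HTTP/x" status bytes "referrer" "user agent"
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Count requests claiming to be Googlebot, per path and per status code."""
    paths, statuses = Counter(), Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue  # skip unparseable lines and non-Googlebot traffic
        paths[match.group("path")] += 1
        statuses[match.group("status")] += 1
    return paths, statuses


sample_log = [
    '66.249.66.1 - - [10/May/2024:10:00:00 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/May/2024:10:00:05 +0000] "GET /old-page HTTP/1.1" 404 312 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/May/2024:10:01:00 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

paths, statuses = googlebot_hits(sample_log)
print(paths.most_common())  # [('/blog/', 1), ('/old-page', 1)]
print(statuses)             # Counter({'200': 1, '404': 1})
```

Over a real log, a high share of 404 or 5xx responses to Googlebot is a direct sign of wasted crawl budget.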

Manual Checks

Some basic checks you can perform yourself:

- Use the “site:yourdomain.com” search operator to see which pages Google has indexed

- Check your robots.txt for accidental blocking directives

- Review the mobile versions of your pages to ensure proper mobile-first indexing

- Analyse your internal linking to identify orphaned pages
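The robots.txt check above can be partly automated. You can test the URLs you want indexed (for example, the ones listed in your XML sitemap) against your robots.txt rules with Python’s `urllib.robotparser`. The robots.txt content, URLs and the `blocked_urls` helper below are hypothetical. This parser uses first-match rule ordering rather than Google’s longest-match rule, so treat its verdicts as a quick sanity check rather than an exact Googlebot simulation.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that robots.txt stops `user_agent` from crawling."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt with an over-broad rule: "/blog" (no trailing slash)
# also matches any path that merely starts with "/blog", such as /blogging-tips/.
robots_txt = """\
User-agent: *
Disallow: /blog
"""

# URLs you want indexed, e.g. taken from the XML sitemap.
sitemap_urls = [
    "https://www.example.com/",
    "https://www.example.com/blog/crawlability/",
    "https://www.example.com/blogging-tips/",
]

print(blocked_urls(robots_txt, sitemap_urls))
# ['https://www.example.com/blog/crawlability/', 'https://www.example.com/blogging-tips/']
```

Any URL that appears in both your sitemap and this output is sending search engines contradictory signals and deserves a closer look.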

Improve Crawlability and Indexability

Improving your site’s crawlability and indexability requires a systematic approach:

Optimise Your Technical Foundation

- Fix broken links and redirect chains that waste crawl budget

- Make actionable improvements to page speed

- Implement a logical site structure with intuitive navigation

- Create an XML sitemap and submit it to search engines

- Optimise robots.txt to ensure important content is accessible

- Address mobile optimisation issues with responsive designs

Enhance Content Quality

- Audit existing content for relevance and comprehensiveness

- Remove or improve thin content pages

- Consolidate redundant content to prevent keyword cannibalisation

- Create contextual internal links between related pages

- Ensure your target landing pages are easily accessible

Monitor and Maintain

- Regularly check Google Search Console for new indexing issues

- Track your indexability rate over time

- Monitor crawl stats to ensure efficient bot traffic

- Use Google Analytics to identify potential content quality issues through metrics like bounce rate and engagement time

- Stay up to date on technical SEO best practices through web developer communities

But Here’s the Thing…

There are more than 200 ranking factors that search engine algorithms consider. It’s a complicated process, but as long as your technical checks are in place, you have a great chance of achieving high search engine rankings. Backlinks, for example, are extremely important in determining how authoritative a piece of content is, as is the overall authority of the domain.

SEO is all about ensuring your content has the right technical checks in place. It’s about making sure you give crawlers permission in your robots.txt file, that crawlers can easily understand your meta tags, that your page headings are clear and relate to the body copy, and that what you provide your readers is valuable and worth reading.

And this last point is quite possibly the most important: value is everything. Because, let’s face it, if an algorithm isn’t going to read your content, a human certainly won’t. The best SEO strategy focuses on creating valuable content for human users first, then making sure search engine bots can properly discover and understand it.

By addressing both crawlability and indexability issues, you create the technical foundation your content needs to reach organic search, whether that means driving targeted traffic to product pages on eCommerce sites or to key value-driven, evergreen landing pages that support your broader digital marketing goals. The salient point is that technical foundations and valuable content work together to help your content rank better: you can’t have one without the other.