Researchers from Anthropic investigated Claude 3.5 Haiku’s ability to decide when to break a line of text within a fixed width, a task that requires the model to track its position as it writes. The study yielded the surprising result that language models form internal patterns resembling the spatial awareness that humans use to track location in physical space.

Andreas Volpini tweeted about this paper and made an analogy to chunking content for AI consumption. In a broader sense, his comment works as a metaphor for how both writers and models navigate structure, finding coherence at the boundaries where one segment ends and another begins.

This research paper, however, is not about reading content but about generating text and identifying where to insert a line break in order to fit the text into an arbitrary fixed width. The purpose of doing that was to better understand what’s going on inside an LLM as it keeps track of text position, word choice, and line break boundaries while writing.

The researchers created an experimental task of generating text with a line break at a specific width. The purpose was to understand how Claude 3.5 Haiku decides on words to fit within a specified width and when to insert a line break, which required the model to track the current position within the line of text it is generating.

The experiment demonstrates how language models learn structure from patterns in text without explicit programming or supervision.

The Linebreaking Problem

The linebreaking task requires the model to decide whether the next word will fit on the current line or if it must start a new one. To succeed, the model must learn the line width constraint (the rule that limits how many characters can fit on a line, like in physical space on a sheet of paper). To do this the LLM must track the number of characters written, compute how many remain, and decide whether the next word fits. The task demands reasoning, memory, and planning. The researchers used attribution graphs to visualize how the model coordinates these calculations, showing distinct internal features for the character count, the next word, and the moment a line break is required.
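
The decision the task demands can be written down as an ordinary greedy rule. The sketch below only illustrates the task itself, not how Claude 3.5 Haiku computes it internally. The function name `wrap_greedy` and the choice to count one space between words are assumptions made for the example.

```python
def wrap_greedy(words, width):
    """Greedy line breaking: start a new line when the next word will not fit."""
    lines = []
    current = ""  # text written so far on the current line
    for word in words:
        # Characters needed: the word, plus a separating space if the line is not empty.
        needed = len(word) + (1 if current else 0)
        remaining = width - len(current)  # characters left before the boundary
        if needed <= remaining:
            current += (" " if current else "") + word
        else:
            if current:
                lines.append(current)
            current = word  # a word longer than `width` still gets its own line
    if current:
        lines.append(current)
    return lines


text = "the quick brown fox jumps over the lazy dog"
for line in wrap_greedy(text.split(), width=15):
    print(line)
# the quick brown
# fox jumps over
# the lazy dog
```

The three quantities named above appear directly: `len(current)` is the character count, `remaining` is how many characters are left, and the comparison against `needed` is the fit decision.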

Continuous Counting

The researchers observed that Claude 3.5 Haiku represents line character counts not as counting step by step, but as a smooth geometric structure that behaves like a continuously curved surface, allowing the model to track position fluidly (on the fly) rather than counting symbol by symbol.

Something else that’s interesting is that they discovered the LLM had developed a boundary head (an “attention head”) that is responsible for detecting the line boundary. An attention mechanism weighs the importance of what is being considered (tokens). An attention head is a specialized component of the attention mechanism of an LLM. The boundary head, which is an attention head, specializes in the narrow task of detecting the end of line boundary.

The research paper states:

“One essential feature of the representation of line character counts is that the “boundary head” twists the representation, enabling each count to pair with a count slightly larger, indicating that the boundary is close. That is, there is a linear map QK which slides the character count curve along itself. Such an action is not admitted by generic high-curvature embeddings of the circle or the interval like the ones in the physical model we constructed. But it is present in both the manifold we observe in Haiku and, as we now show, in the Fourier construction.”

How Boundary Sensing Works

The researchers found that Claude 3.5 Haiku knows when a line of text is almost reaching the end by comparing two internal signals:

- How many characters it has already generated, and

- How long the line is supposed to be.

The aforementioned boundary attention heads decide which parts of the text to focus on. Some of these heads specialize in recognizing when the line is about to reach its limit. They do this by slightly rotating or lining up the two internal signals (the character count and the maximum line width) so that when they nearly match, the model’s attention shifts toward inserting a line break.

The researchers explain:

“To detect an approaching line boundary, the model must compare two quantities: the current character count and the line width. We find attention heads whose QK matrix rotates one counting manifold to align it with the other at a specific offset, creating a large inner product when the difference of the counts falls within a target range. Multiple heads with different offsets work together to precisely estimate the characters remaining.”
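
The rotate-and-compare idea can be imitated with a toy built from hand-made sine and cosine features. This is a minimal sketch under stated assumptions, not the model’s learned weights: the names `embed`, `rotate`, and `head_score`, along with the values of `PERIOD` and `FREQS`, are all invented for illustration.

```python
import math

PERIOD = 200                 # assumed: longer than any line width used below
FREQS = (1, 2, 3, 4, 5, 6)   # assumed set of Fourier frequencies


def embed(count):
    """Place a count on a smooth curve using cosine/sine pairs."""
    vec = []
    for k in FREQS:
        angle = 2 * math.pi * k * count / PERIOD
        vec += [math.cos(angle), math.sin(angle)]
    return vec


def rotate(vec, offset):
    """Linear map that slides an embedded count forward by `offset` characters."""
    out = []
    for i, k in enumerate(FREQS):
        theta = 2 * math.pi * k * offset / PERIOD
        c, s = vec[2 * i], vec[2 * i + 1]
        out += [c * math.cos(theta) - s * math.sin(theta),
                c * math.sin(theta) + s * math.cos(theta)]
    return out


def head_score(char_count, line_width, offset):
    """Inner product of the rotated character count with the line width."""
    q = rotate(embed(char_count), offset)
    k = embed(line_width)
    return sum(a * b for a, b in zip(q, k))


# Several toy "heads", each tuned to a different number of characters remaining.
offsets = (0, 5, 10, 15, 20)
scores = {off: head_score(70, 80, off) for off in offsets}
best = max(scores, key=scores.get)
print(best)  # 10: the head tuned to "10 characters remaining" responds most strongly
```

In this toy, a head’s score peaks exactly when the line width minus the character count equals its offset, so reading off which head fires most strongly gives an estimate of the characters remaining.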

Final Stage

At this stage of the experiment, the model has already determined how close it is to the line’s boundary and how long the next word will be. The last step is to use that information.

Here’s how it’s explained:

“The final step of the linebreak task is to combine the estimate of the line boundary with the prediction of the next word to determine whether the next word will fit on the line, or if the line should be broken.”

The researchers found that certain internal features in the model activate when the next word would cause the line to exceed its limit, effectively serving as boundary detectors. When that happens, the model raises the chance of predicting a newline symbol and lowers the chance of predicting another word. Other features do the opposite: they activate when the word still fits, lowering the chance of inserting a line break.

Together, these two forces, one pushing for a line break and one holding it back, balance out to make the decision.
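
One way to picture that balance is as two opposing contributions to the score for a newline. The sketch below is a loose illustration, not the circuit the paper describes: `newline_probability`, its `sharpness` parameter, and the half-character shift that treats an exact fit as fitting are all assumptions.

```python
import math


def newline_probability(chars_remaining, next_word_len, sharpness=2.0):
    """Toy balance between a 'break' signal and a 'keep writing' signal."""
    # Positive margin: the word overflows the line. The 0.5 shift is an
    # assumption so that a word that fits exactly counts as fitting.
    margin = next_word_len - chars_remaining - 0.5
    break_push = max(0.0, margin)   # active only when the word would overflow
    hold_back = max(0.0, -margin)   # active only when the word still fits
    logit = sharpness * (break_push - hold_back)
    return 1.0 / (1.0 + math.exp(-logit))


print(newline_probability(3, 8) > 0.5)    # True: an 8-letter word will not fit in 3
print(newline_probability(10, 4) > 0.5)   # False: a 4-letter word fits in 10
```

Only one of the two signals is active at a time here, which keeps the toy easy to read. The article describes features of both kinds that together settle the outcome.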

Models Can Have Visual Illusions?

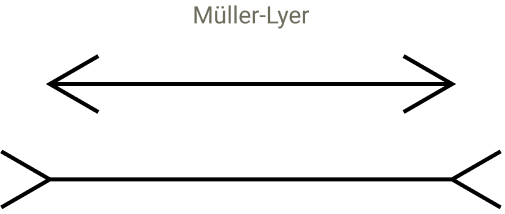

The next part of the research is kind of incredible because they endeavored to test whether the model could be susceptible to visual illusions that would trip it up. They started with the idea of how humans can be tricked by visual illusions that present a false perspective that makes lines of the same length appear to be different lengths, one shorter than the other.

Screenshot Of A Visual Illusion

The researchers inserted artificial tokens, such as “@@,” to see how they disrupted the model’s sense of position. These tests caused misalignments in the internal patterns the model uses to keep track of position, similar to visual illusions that trick human perception. This caused the model’s sense of line boundaries to shift, showing that its perception of structure depends on context and learned patterns. Even though LLMs don’t see, disrupting the relevant attention heads distorts their internal organization, similar to how humans misjudge what they see.

They explained:

“We find that it does modulate the predicted next token, disrupting the newline prediction! As predicted, the relevant heads get distracted: whereas with the original prompt, the heads attend from newline to newline, in the altered prompt, the heads also attend to the @@.”

They wondered if there was something special about the @@ characters or whether any other random characters would disrupt the model’s ability to successfully complete the task. So they ran a test with 180 different sequences and found that most of them did not disrupt the model’s ability to predict the line break point. They discovered that only a small group of characters that were code related were able to distract the relevant attention heads and disrupt the counting process.

LLMs Have Visual-Like Perception For Text

The study shows how text-based features evolve into smooth geometric systems inside a language model. It also shows that models don’t only process symbols, they create perception-based maps from them. This part, about perception, is to me what’s really interesting about the research. They keep circling back to analogies related to human perception and how these analogies keep fitting into what they see going on inside the LLM.

They write:

“Although we sometimes describe the early layers of language models as responsible for “detokenizing” the input, it is perhaps more evocative to think of this as perception. The beginning of the model is really responsible for seeing the input, and much of the early circuitry is in service of sensing or perceiving the text similar to how early layers in vision models implement low level perception.”

Then a little later they write:

“The geometric and algorithmic patterns we observe have suggestive parallels to perception in biological neural systems. …These features exhibit dilation—representing increasingly large character counts activating over increasingly large ranges—mirroring the dilation of number representations in biological brains. Moreover, the organization of the features on a low dimensional manifold is an instance of a common motif in biological cognition. While the analogies are not perfect, we suspect that there is still fruitful conceptual overlap from increased collaboration between neuroscience and interpretability.”

Implications For SEO?

Arthur C. Clarke wrote that advanced technology is indistinguishable from magic. I think that once you understand a technology it becomes more relatable and less like magic. Not all knowledge has a utilitarian use and I think understanding how an LLM perceives content is useful to the extent that it’s no longer magical. Will this research make you a better SEO? It deepens our understanding of how language models organize and interpret content structure, making it more understandable and less like magic.

Read about the research here:

When Models Manipulate Manifolds: The Geometry of a Counting Task

Featured Image by Shutterstock/Krot_Studio