SQL is the language of data; however, anyone who has spent some time writing queries knows the pain. Remembering the exact syntax for window functions, writing multi-table joins, and debugging cryptic SQL errors can be tedious and time-consuming. For non-technical users, getting simple answers often requires calling in a data analyst. Large Language Models (LLMs) are starting to change this situation. Acting as copilots, LLMs can take human instructions and convert them into SQL queries, explain complex SQL queries to humans, and suggest optimizations for faster computation. The results are clear: faster iterations, lower barriers for non-technical users, and less time wasted looking up syntax.

Why LLMs Make Sense for SQL

LLMs excel at mapping natural language into structured text, and SQL is essentially structured text with well-defined patterns. Ask an LLM to “Find the top 5 selling products last quarter,” and it can draft a query using GROUP BY (to aggregate sales per product), ORDER BY, and LIMIT (to get the top 5) clauses.
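
As a rough illustration, the sketch below shows the kind of draft such a request might yield, run against an in-memory SQLite database. The `sales` table, its columns, the sample rows, and the choice of Q1 2024 as "last quarter" are all assumptions made for this example, not something a real model is guaranteed to produce.

```python
import sqlite3

# Hypothetical schema and data, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (product TEXT, quantity INTEGER, sold_on TEXT)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        ("widget", 10, "2024-01-15"),
        ("gadget", 7, "2024-02-03"),
        ("widget", 5, "2024-03-20"),
        ("gizmo", 12, "2024-02-11"),
        ("doohickey", 1, "2024-04-02"),  # falls outside the assumed quarter
    ],
)

# The kind of draft an LLM might produce for
# "Find the top 5 selling products last quarter" (Q1 2024 assumed).
TOP_PRODUCTS_SQL = """
SELECT product, SUM(quantity) AS units_sold
FROM sales
WHERE sold_on >= '2024-01-01' AND sold_on < '2024-04-01'
GROUP BY product
ORDER BY units_sold DESC
LIMIT 5
"""

top_products = conn.execute(TOP_PRODUCTS_SQL).fetchall()
print(top_products)
```

Note that the date filter is the part a vague request leaves open: "last quarter" has to be turned into explicit bounds by someone, either in the prompt or during review.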

On top of drafting queries, LLMs can act as useful debugging partners. If a query fails, they can summarize the error, spot the faults in your SQL, and recommend different ways to fix it. They can also suggest more efficient alternatives to reduce computation time, and translate SQL issues into plain English for better understanding.

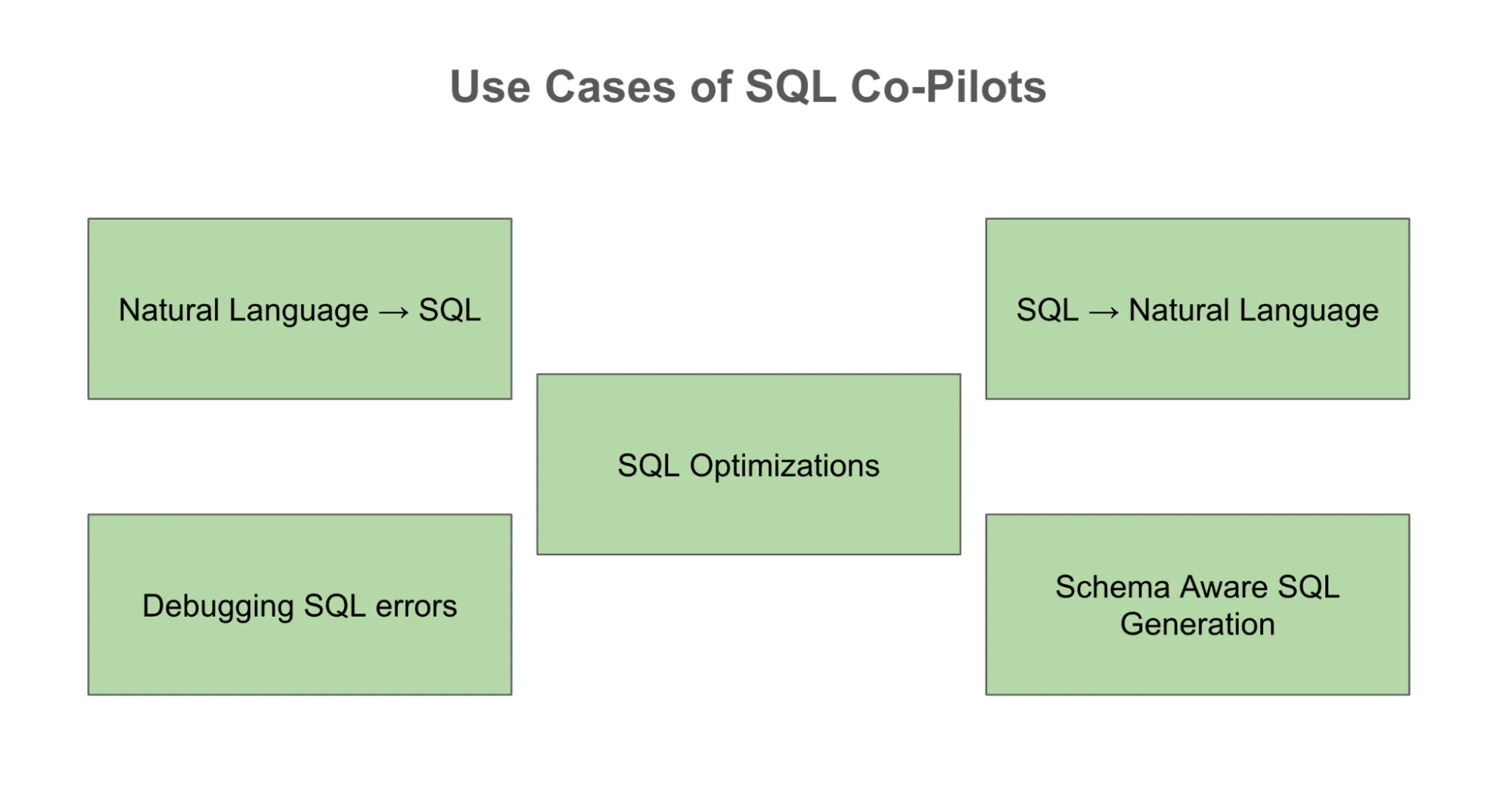

Everyday Use Cases

The most obvious use case is natural language to SQL, which allows anyone to express a business need and receive a draft query. But there are plenty of others. An analyst can paste an error message, and the LLM can help debug it. The same analyst can then share the prompts that worked with fellow team members to save them time. Newcomers can lean on the copilot to translate SQL into natural language. With the correct schema context, LLMs can generate queries tailored to the organization’s actual database structures, making them much more powerful than generic syntax generators.

Copilot, Not Autopilot

Despite all their promise, LLMs also have some known limitations. The most prominent are hallucinated columns and invented table names when the real ones are not provided. Without the correct schema context, the LLM is likely to fall back on assumptions and get them wrong. Queries generated by LLMs may execute, but they may not be efficient, leading to increased costs and slower execution times. On top of these issues, there is an obvious security risk, as sensitive internal schemas might be shared with external APIs.

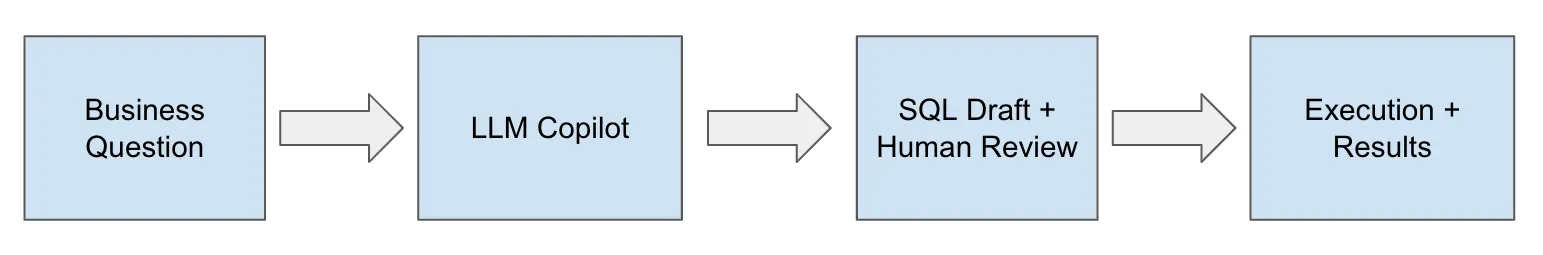

The conclusion is very simple: LLMs should be treated as copilots rather than relied on completely. They can help draft and accelerate work, but human intervention will still be needed to validate queries before execution.
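
One cheap automated check that can sit in front of the human review is to compile a draft against the real schema without running it. The sketch below does this with SQLite's `EXPLAIN`, which prepares the statement and therefore fails on unknown tables or columns. The `check_draft` helper and the `orders` table are hypothetical names for this example, and other databases offer different mechanisms for the same idea.

```python
import sqlite3


def check_draft(conn, sql):
    """Compile a draft query without running it; return an error message or None."""
    try:
        # EXPLAIN makes SQLite prepare the statement, so unknown tables or
        # columns raise an error, but the query itself is not executed.
        conn.execute("EXPLAIN " + sql)
    except sqlite3.Error as exc:
        return str(exc)
    return None


# Hypothetical schema, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, customer_id INTEGER, total REAL)")

good_draft = "SELECT customer_id, SUM(total) FROM orders GROUP BY customer_id"
# customer_name does not exist: a typical hallucinated column.
bad_draft = "SELECT customer_name, SUM(total) FROM orders GROUP BY customer_name"

good_error = check_draft(conn, good_draft)
bad_error = check_draft(conn, bad_draft)
print(good_error)
print(bad_error)
```

A check like this only catches drafts that cannot compile. A query that compiles but answers the wrong question still needs a person to review it.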

Improving LLM Results through Prompt Engineering

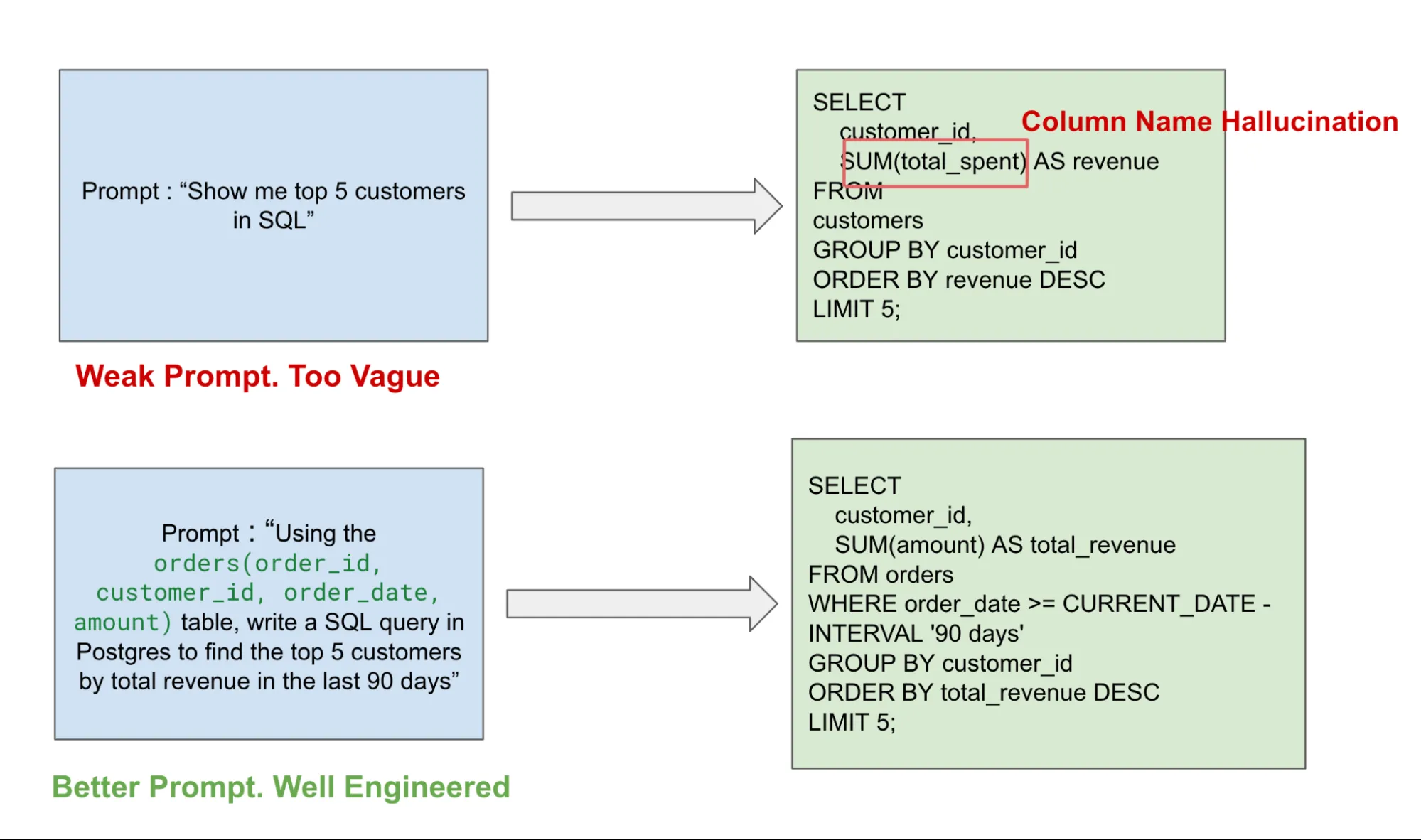

Prompt engineering is one of the most important skills to learn in order to use LLMs effectively. For SQL copilots, prompting is a key lever, as vague prompts can often lead to incomplete, wrong, and sometimes nonsensical queries. With the correct schema context, including table and column information and descriptions, the quality of the output query can improve dramatically.

Along with the data schema, the SQL dialect also matters. Dialects such as Postgres, BigQuery, and Presto have small differences, and telling the LLM which dialect to use helps avoid syntax mismatches. Being detailed about the output also matters: for example, specify the date range, the top N users, and so on, to avoid incorrect results and unnecessary data scans (which can lead to expensive queries).
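
The three ingredients above (schema, dialect, and explicit output constraints) can be assembled into a prompt with a small helper. This is a minimal sketch: `build_sql_prompt`, the wording of the instructions, and the `orders` schema are all assumptions for illustration, and the resulting string would still need to be sent to whichever model you use.

```python
def build_sql_prompt(question, schema_ddl, dialect, constraints=()):
    """Assemble a prompt that carries schema, dialect, and output constraints."""
    lines = [
        f"You are a SQL assistant. Write one {dialect} query.",
        "Use only the tables and columns defined in this schema:",
        schema_ddl.strip(),
        f"Question: {question}",
    ]
    if constraints:
        lines.append("Constraints:")
        lines.extend(f"- {c}" for c in constraints)
    return "\n".join(lines)


# Hypothetical schema, for illustration only.
SCHEMA = """
CREATE TABLE orders (
    order_id BIGINT,
    user_id BIGINT,
    amount NUMERIC,
    created_at TIMESTAMP
);
"""

prompt = build_sql_prompt(
    question="Who are the top 10 users by total order amount?",
    schema_ddl=SCHEMA,
    dialect="PostgreSQL",
    constraints=["Only orders created in 2024", "Return user_id and total_amount"],
)
print(prompt)
```

Keeping the schema and constraints as separate arguments makes it easier to reuse the same template across questions and to audit exactly what schema information leaves the organization.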

In my experience, iterative prompting works best for complex queries: ask the LLM to build a simple query structure first, then refine it step by step. You can also ask the LLM to explain its logic before giving you the final SQL. This is useful for debugging and for steering the LLM toward the right parts of the problem. You can use few-shot prompting, where you show the LLM an example query before asking it to generate a new one, so that it has more context. Finally, error-driven prompting, where the error message is passed back to the LLM, helps the end user debug a failure and get a fix. These prompting techniques are what make the difference between queries that are “almost correct” and ones that actually run.
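
Error-driven prompting can be sketched as a small loop: request a query, try to compile it, and pass any error message back into the next request. In the sketch below, `repair_loop` is a hypothetical helper and `fake_llm` is a stand-in for a real model call, so the example runs without any external API. The `users` table is likewise invented for illustration.

```python
import sqlite3


def repair_loop(conn, question, generate_sql, max_attempts=3):
    """Ask for SQL, try to compile it, and feed any error back into the next request."""
    error = None
    for attempt in range(1, max_attempts + 1):
        sql = generate_sql(question, error)
        try:
            conn.execute("EXPLAIN " + sql)  # compile only, do not run the query
            return sql, attempt
        except sqlite3.Error as exc:
            error = str(exc)  # becomes part of the next prompt
    raise RuntimeError(f"no valid query after {max_attempts} attempts: {error}")


def fake_llm(question, error):
    """Stand-in for a real model call: hallucinates a column, then corrects it."""
    if error is None:
        return "SELECT user_name FROM users"
    return "SELECT name FROM users"


# Hypothetical schema, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER, name TEXT)")

sql, attempts = repair_loop(conn, "List all user names", fake_llm)
print(sql, attempts)
```

The attempt cap matters in practice: without it, a model that keeps producing invalid SQL would loop indefinitely and keep incurring API costs.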

The example illustrates this: a vague prompt leads to column-name hallucination, whereas a well-engineered, more detailed prompt yields a well-defined query that matches the required SQL dialect without any hallucination.

Best Practices for LLMs as SQL Copilots

There are some best practices to follow when using a SQL copilot. First, always review the query manually before running it, especially in a production environment, and treat LLM outputs as drafts rather than final output. Second, integration is key: a copilot integrated with the team’s existing IDE, notebooks, and so on will be more usable and effective.
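
One way to make "treat outputs as drafts" concrete is to run unreviewed queries on a connection that cannot modify data. The sketch below uses SQLite's `PRAGMA query_only` for this. The `run_draft_read_only` helper and the `accounts` table are hypothetical, and in a production database the equivalent would more likely be a read-only role or replica, which is an assumption about your setup rather than something the article prescribes.

```python
import sqlite3

# Hypothetical table and data, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (id INTEGER, balance REAL)")
conn.execute("INSERT INTO accounts VALUES (1, 100.0)")
conn.commit()


def run_draft_read_only(conn, sql):
    """Run an unreviewed draft with writes disabled on this connection."""
    conn.execute("PRAGMA query_only = ON")
    try:
        return conn.execute(sql).fetchall()
    except sqlite3.Error:
        conn.rollback()  # discard any transaction opened for the failed statement
        raise
    finally:
        conn.execute("PRAGMA query_only = OFF")


rows = run_draft_read_only(conn, "SELECT id, balance FROM accounts")

try:
    run_draft_read_only(conn, "DELETE FROM accounts")
    blocked = False
except sqlite3.OperationalError:
    blocked = True  # the write was rejected, the data is untouched

remaining = conn.execute("SELECT COUNT(*) FROM accounts").fetchone()[0]
print(rows, blocked, remaining)
```

This guards against accidental writes only. It does not replace the manual review, since a read-only query can still be wrong or expensive.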

Guardrails and Risks

SQL copilots can bring huge productivity gains, but there are some risks we should consider before rolling them out organization-wide. The first concern is over-reliance: data analysts may come to lean heavily on copilots and never build core SQL knowledge. This can lead to skills gaps where teams can write SQL prompts but can’t troubleshoot the resulting queries.

Another concern is data governance. We need to make sure copilots don’t share sensitive data with users who lack the correct permissions, and that they are protected against prompt injection attacks. Organizations need to build the right data governance layer to prevent information leakage. Finally, there are cost implications: frequent API calls to copilots can add up quickly. Without sound usage and token policies, this can cause budget issues.

Evaluation Metrics for Copilot Success

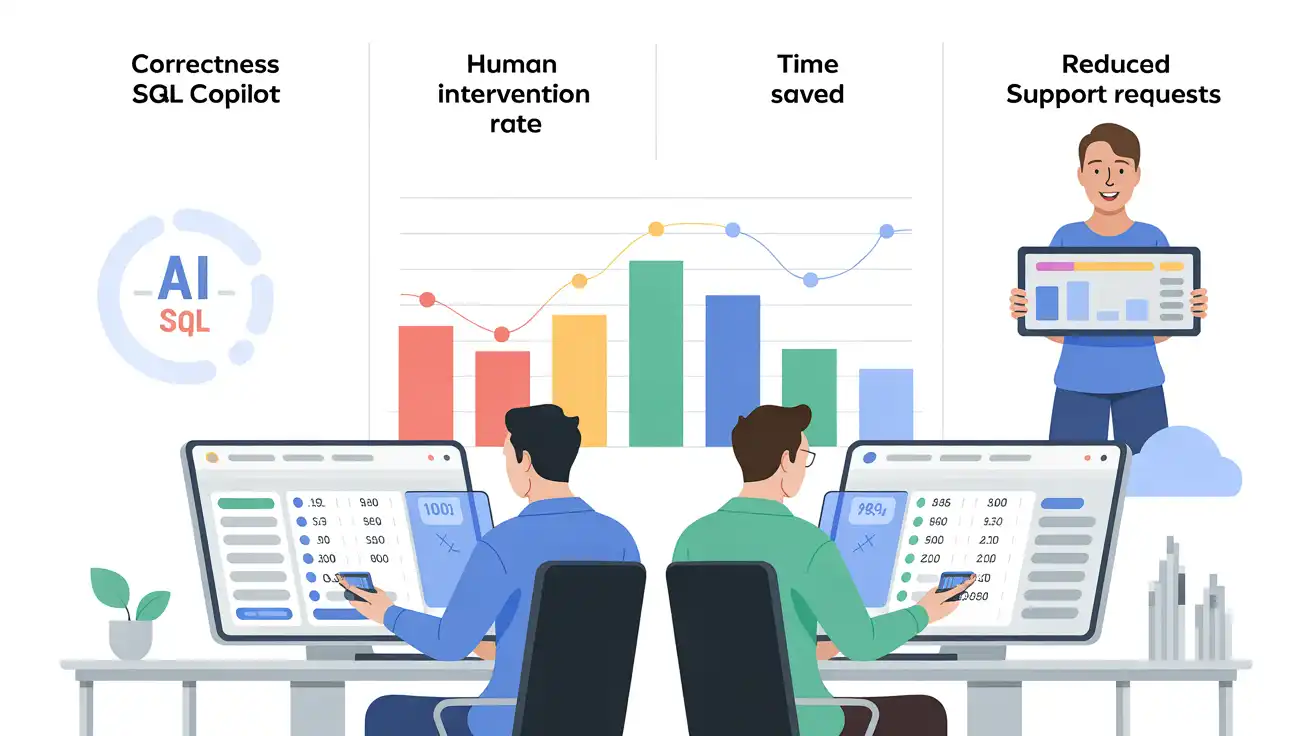

An important question when investing in LLMs for SQL copilots is: how do you know they’re working? There are several dimensions along which you can measure the effectiveness of copilots, such as correctness, human intervention rate, time saved, and reduction in repetitive support requests. Correctness asks: when the SQL copilot provides a query that runs without errors, does it produce the expected result? This can be assessed by taking a sample of inputs given to the copilot and having analysts draft the same queries to compare outputs. This not only helps validate copilot results but can also be used to improve prompts for better accuracy. On top of this, the exercise gives you an estimate of the time saved per query, helping quantify the productivity boost.
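
The comparison described above can be automated by running the copilot's query and the analyst's reference query on the same data and checking that they return the same rows. This is a sketch under assumptions: `same_result`, the `orders` table, and the sample queries are invented for illustration, and row order is deliberately ignored, which is only appropriate when the question does not ask for a specific ordering.

```python
import sqlite3
from collections import Counter


def same_result(conn, candidate_sql, reference_sql):
    """True when both queries return the same rows, ignoring row order."""
    candidate = conn.execute(candidate_sql).fetchall()
    reference = conn.execute(reference_sql).fetchall()
    # Counter compares the rows as multisets, so duplicates still count.
    return Counter(candidate) == Counter(reference)


# Hypothetical table and data, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("north", 10.0), ("north", 20.0), ("south", 5.0)],
)

reference = "SELECT region, SUM(amount) FROM orders GROUP BY region"
copilot_ok = "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region DESC"
copilot_wrong = "SELECT region, AVG(amount) FROM orders GROUP BY region"  # runs, wrong answer

ok_matches = same_result(conn, copilot_ok, reference)
wrong_matches = same_result(conn, copilot_wrong, reference)
print(ok_matches, wrong_matches)
```

The second candidate is the important case: it executes without error, so only a comparison against a trusted reference reveals that it answers a different question.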

Another simple metric to consider is the percentage of generated queries that run without human edits. If the copilot consistently produces working, runnable queries, it is clearly saving time. A less obvious but powerful measure is the reduction in repeated support requests from non-technical staff. If business teams can self-serve more of their questions with copilots, data teams can spend less time answering basic SQL requests and more time on quality insights and strategic direction.
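
These rates are straightforward to compute once each generated query is logged with a few flags. The sketch below assumes a hypothetical `QueryLog` record and made-up sample data. Correctness is computed only over queries that ran without errors, following the definition given earlier in this section.

```python
from dataclasses import dataclass


@dataclass
class QueryLog:
    """One generated query, as recorded during an evaluation sample (hypothetical)."""
    ran_without_error: bool
    matched_reference: bool
    edited_by_human: bool


def summarize(logs):
    """Return correctness among runnable queries and the share needing no edits."""
    total = len(logs)
    if total == 0:
        return {"correctness": 0.0, "no_edit_rate": 0.0}
    runnable = [log for log in logs if log.ran_without_error]
    correct = sum(log.matched_reference for log in runnable)
    no_edit = sum(log.ran_without_error and not log.edited_by_human for log in logs)
    return {
        "correctness": correct / len(runnable) if runnable else 0.0,
        "no_edit_rate": no_edit / total,
    }


# Made-up sample, for illustration only.
sample = [
    QueryLog(ran_without_error=True, matched_reference=True, edited_by_human=False),
    QueryLog(ran_without_error=True, matched_reference=False, edited_by_human=True),
    QueryLog(ran_without_error=True, matched_reference=True, edited_by_human=True),
    QueryLog(ran_without_error=False, matched_reference=False, edited_by_human=True),
]

metrics = summarize(sample)
print(metrics)
```

Tracking both numbers separately is useful because they can diverge: a copilot may produce many runnable queries that analysts still have to correct.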

The Road Ahead

The potential here is very exciting. Imagine copilots that can help you with the whole end-to-end process: schema-aware SQL generation, integrated into a data catalog, and capable of producing dashboards or visualizations. On top of this, copilots can learn from your team’s past queries to adapt to their style and business logic. The future of SQL is not about replacing it but about removing the friction to increase efficiency.

SQL is still the backbone of the data stack; LLMs, when working as copilots, will make it more accessible and productive. The gap between asking a question and getting an answer will be dramatically reduced. This will free up analysts to spend less time wrangling and googling syntax and more time creating insights. Used wisely, with careful prompting and human oversight, LLMs are poised to become a standard part of the data professional’s toolkit.

Frequently Asked Questions

A. They turn natural language into SQL, explain complex queries, debug errors, and suggest optimizations, helping both technical and non-technical users work faster with data.

A. Because LLMs can hallucinate columns or make schema assumptions. Human review is essential to ensure accuracy, efficiency, and data security.

A. By giving clear schema context, specifying SQL dialects, and refining queries iteratively. Detailed prompts drastically reduce hallucinations and syntax errors.
