(Shutterstock AI)

The past few years have seen AI grow faster than any technology in modern memory. Training runs that once operated quietly inside university labs now span massive facilities full of high-performance computers, tapping into a web of GPUs and vast volumes of data.

AI essentially runs on three ingredients: chips, data and electricity. Among them, electricity has been the most difficult to scale. Each new generation of models is more powerful and is often claimed to be more power-efficient at the chip level, but the total energy required keeps rising.

Larger datasets, longer training runs and more parameters drive total power use much higher than was possible with earlier systems. The plethora of algorithms has given way to an engineering roadblock. The next phase of AI progress will rise or fall on who can secure the power, not the compute.

In this part of our Powering Data in the Age of AI series, we’ll look at how energy has become the defining constraint on computational progress, from the megawatts required to feed training clusters to the nuclear projects and grid innovations that could support them.

Understanding the Scale of the Energy Problem

The International Energy Agency (IEA) calculated that data centers worldwide consumed around 415 terawatt-hours (TWh) of electricity in 2024. That number is set to more than double, to around 945 TWh by 2030, as the demands of AI workloads continue to rise. Consumption has grown at 12% per year over the last five years.
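To put the projection next to the historical 12% figure, the implied yearly growth rate can be worked out from the two IEA numbers quoted above. This is a minimal sketch that assumes smooth compound growth between 2024 and 2030, which the IEA does not claim; the function name is illustrative.

```python
def implied_annual_growth(start_twh: float, end_twh: float, years: int) -> float:
    """Compound annual growth rate that takes start_twh to end_twh in `years` years."""
    return (end_twh / start_twh) ** (1 / years) - 1

# IEA figures quoted in the text: about 415 TWh in 2024, about 945 TWh by 2030.
rate = implied_annual_growth(415, 945, 2030 - 2024)
print(f"Implied growth: {rate:.1%} per year")
```

Under that assumption the result comes out a little under 15% per year, faster than the 12% pace of the past five years.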

Fatih Birol, the executive director of the IEA, called AI “one of the biggest stories in energy today” and said that demand for electricity from data centers could soon rival what entire countries use.

“Demand for electricity around the world from data centres is on course to double over the next five years, as information technology becomes more pervasive in our lives,” Birol said in a statement released with the IEA’s 2024 Energy and AI report.

“The impact will be especially strong in some countries: in the United States, data centres are projected to account for nearly half of the growth in electricity demand; in Japan, over half; and in Malaysia, one-fifth.”

Already, that shift is transforming how and where power gets delivered. The tech giants aren’t only building data centers for proximity or network speed. They’re also chasing stable grids, low-cost electricity and space for renewable generation.

According to Lawrence Berkeley National Laboratory research, data centers consumed roughly 176 terawatt-hours of electricity in the US alone in 2023, or about 4.4% of total national demand. The buildout is not slowing down. By the end of the decade, new projects could drive consumption to almost 800 TWh, as more than 80 gigawatts of additional capacity is projected to come online, provided the projects are completed in time.

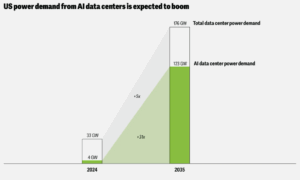

Deloitte projects that power demand from AI data centers will climb from about 4 gigawatts in 2024 to roughly 123 gigawatts by 2035. Given these projections, it’s no great surprise that power now dictates where the next cluster will be built, not fiber routes or tax incentives. In some regions, energy planners and tech companies are even negotiating directly to ensure a long-term supply. What was once a question of compute and scale has now become an issue of energy.

Why AI Systems Consume So Much Power

The reliance on energy is partly due to the fact that all layers of AI infrastructure run on electricity. At the core of every AI system is pure computation. The chips that train and run large models are the biggest energy draw by far, performing billions of mathematical operations every second. Google published an estimate that a median Gemini Apps text prompt uses 0.24 watt-hours of electricity. Multiply that across the millions of text prompts sent every day, and the numbers are staggering.
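The multiplication is simple enough to sketch. The snippet below takes the 0.24 watt-hour estimate from the text and scales it by daily prompt counts; the prompt volumes are hypothetical values chosen only to show how the total scales, not reported traffic figures, and the function name is illustrative.

```python
WH_PER_PROMPT = 0.24  # Google's published per-prompt estimate, as quoted in the text

def daily_energy_mwh(prompts_per_day: float, wh_per_prompt: float = WH_PER_PROMPT) -> float:
    """Energy in megawatt-hours used by a given number of prompts in one day."""
    return prompts_per_day * wh_per_prompt / 1_000_000  # Wh -> MWh

# Hypothetical daily volumes, for illustration only.
for prompts in (1_000_000, 100_000_000, 1_000_000_000):
    print(f"{prompts:>13,} prompts/day -> {daily_energy_mwh(prompts):,.2f} MWh/day")
```

This counts only the per-prompt inference estimate; it leaves out training runs and any workloads other than text prompts.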

The GPUs that train and run these models consume enormous power, nearly all of which is turned directly into heat (plus losses in power conversion). That heat has to be dissipated continuously, using cooling systems that themselves consume energy.

Maintaining that thermal stability takes a lot of nonstop running of cooling systems, pumps and air handlers. A single rack of modern accelerators can consume 30 to 50 kilowatts, several times what older servers needed. Moving data takes energy, too: high-speed interconnects, storage arrays and voltage conversions all contribute to the load.

Unlike older mainframe workloads that spiked and dropped with changing demand, modern AI systems operate close to full capacity for days or even weeks at a time. This constant intensity places sustained pressure on power delivery and cooling systems, turning energy efficiency from a simple cost consideration into the foundation of scalable computation.

Power Problem Growing Faster Than the Chips

Every leap in chip performance now brings an equal and opposite strain on the systems that power it. Each new generation from NVIDIA or AMD raises expectations for speed and efficiency, yet the real story is unfolding outside the chip, in the data centers trying to feed them. Racks that once drew 15 or 20 kilowatts now pull 80 or more, sometimes reaching 120. Power distribution units, transformers, and cooling loops all have to evolve just to keep up.
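To see what those rack figures mean over a year, the sketch below converts a rack's power draw into annual energy. It assumes the rack runs at its quoted draw around the clock. The `utilization` and `pue` parameters are illustrative knobs, not numbers from this article, and both default to 1.0 so that the output reflects only the rack draw itself.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours

def annual_rack_energy_mwh(rack_kw: float, utilization: float = 1.0, pue: float = 1.0) -> float:
    """Yearly energy for one rack, in megawatt-hours.

    utilization: average fraction of the quoted draw (assumed, default 1.0).
    pue: power usage effectiveness, a multiplier that adds cooling and
         conversion overhead on top of the IT load (assumed, default 1.0).
    """
    return rack_kw * utilization * pue * HOURS_PER_YEAR / 1000  # kWh -> MWh

# Rack draws mentioned in the text: 20 kW (older), 80 kW and 120 kW (current).
for kw in (20, 80, 120):
    print(f"{kw:>3} kW rack -> {annual_rack_energy_mwh(kw):,.1f} MWh/year")
```

Passing a `pue` above 1.0 folds facility overhead into the estimate, which is why the same rack costs more energy in a less efficient building.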

What was once a question of processor design has become an engineering puzzle of scale. The Semiconductor Industry Association’s 2025 State of the Industry report describes this as a “performance-per-watt paradox,” where efficiency gains at the chip level are being outpaced by total energy growth across systems. Each improvement invites larger models, longer training runs, and heavier data movement, erasing the very savings those chips were meant to deliver.

To handle this new demand, operators are shifting from air to liquid cooling, upgrading substations, and negotiating directly with utilities for multi-megawatt connections. The infrastructure built for yesterday’s servers is being reimagined around power delivery, not compute density. As chips grow more capable, the physical world around them (the wires, pumps, and grids) is struggling to catch up.

The New Metric That Rules the AI Era: Speed-to-Power

Inside the largest data centers in the world, a quiet shift is taking place. The old race for pure speed has given way to something more fundamental: how much performance can be extracted per unit of power. This balance, known as the speed-to-power tradeoff, has become the defining equation of modern AI.

It’s not a benchmark like FLOPS, but it now influences nearly every design decision. Chipmakers promote performance per watt as their most important competitive edge, because speed doesn’t matter if the grid can’t handle it. NVIDIA’s H200 GPU, for instance, delivers about 1.4 times the performance-per-watt of the H100, while AMD’s MI300 family focuses heavily on efficiency for large-scale training clusters. Still, as chips get more advanced, the demand for energy grows with them.
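A short calculation shows why better performance per watt does not by itself lower total energy use. Performance per watt is work done per unit of energy, so total energy scales as workload divided by efficiency. The sketch below applies the 1.4x figure quoted above; the workload growth factors are hypothetical, chosen to bracket the break-even point, and the function name is illustrative.

```python
def relative_energy(workload_growth: float, perf_per_watt_gain: float) -> float:
    """Energy use relative to baseline when workload grows and efficiency improves.

    energy = work / (work per unit of energy), so the ratio is growth / gain.
    """
    return workload_growth / perf_per_watt_gain

GAIN = 1.4  # performance-per-watt improvement quoted for the H200 over the H100

# Hypothetical workload growth factors, for illustration only.
for growth in (1.0, 1.4, 3.0):
    print(f"workload x{growth}: energy x{relative_energy(growth, GAIN):.2f}")
```

Energy falls only while workload grows more slowly than efficiency. Once workload growth passes 1.4x in this example, total consumption rises despite the more efficient chip.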

That dynamic is also reshaping the economics of AI. Cloud providers are starting to charge for workloads based not just on runtime but on the power they draw, forcing developers to optimize for energy throughput rather than latency. Data center architects now design around megawatt budgets instead of square footage, while governments from the U.S. to Japan are issuing new rules for energy-efficient AI systems.

It may never appear on a spec sheet, but speed-to-power quietly defines who can build at scale. When one model can consume as much electricity as a small city, efficiency matters, and it shows in how the entire ecosystem is reorganizing around it.

The Race for AI Supremacy

As energy becomes the new epicenter of computational advantage, governments and companies that can produce reliable power at scale will pull ahead not only in AI but across the broader digital economy. Analysts describe this as the rise of a “strategic electricity advantage.” The concept is both straightforward and far-reaching: as AI workloads surge, the countries able to deliver abundant, low-cost energy will lead the next wave of industrial and technological progress.

Without faster investment in nuclear power and grid expansion, the US could face reliability risks by the early 2030s. That’s why the conversation is shifting from cloud regions to power regions.

Several governments are already investing in nuclear computation hubs, zones that combine small modular reactors with hyperscale data centers. Others are using federal lands for hybrid projects that pair nuclear with gas and renewables to meet AI’s growing demand for electricity. This is only the beginning of the story. The real question is not whether we can power AI, but whether our world can keep up with the machines it has created.

In the next parts of our Powering Data in the Age of AI series, we’ll explore how companies are turning to new sources of energy to sustain their AI ambitions, how the power grid itself is being reinvented to think and adapt like the systems it fuels, and how data centers are evolving into the laboratories of modern science. We’ll also look outward at the race unfolding between the US, China, and other countries to gain control over the electricity and infrastructure that will drive the next era of intelligence.
