As humans, we learn to do new things, like ballet or boxing (both activities I had the chance to try this summer!), through trial and error. We improve by trying things out, learning from our mistakes, and listening to guidance. I know this feedback loop well: part of my intern project for the summer was teaching a reward model to identify better code fixes to show users, as part of Databricks’ effort to build a top-tier Code Assistant.

However, my model wasn’t the only one learning through trial and error. While teaching my model to distinguish good code fixes from bad ones, I learned how to write robust code, balance latency and quality concerns for an impactful product, communicate clearly with a larger team, and most of all, have fun along the way.

Databricks Assistant Quick Fix

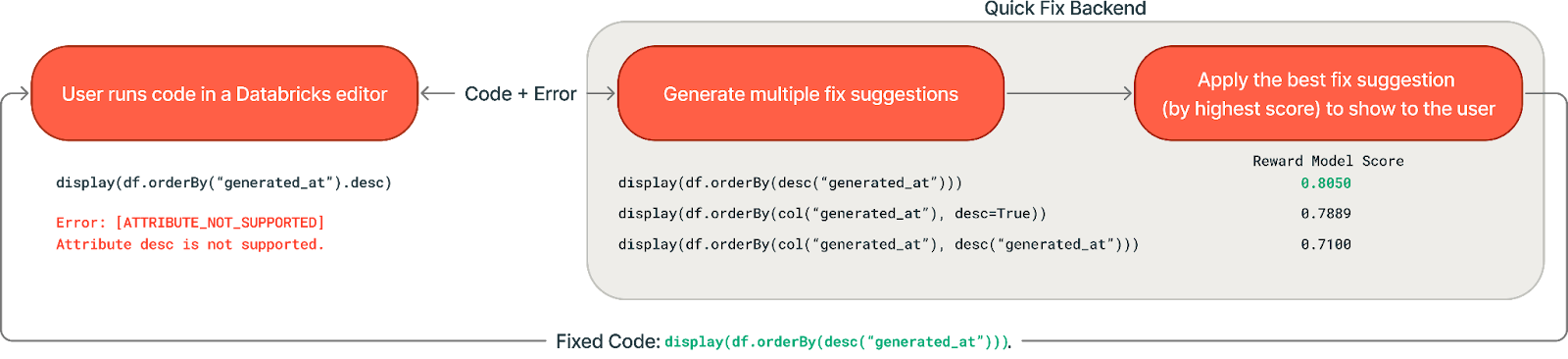

If you’ve ever written code and tried to run it, only to get a pesky error, then you would appreciate Quick Fix. Built into Databricks Notebooks and SQL Editors, Quick Fix is designed for high-confidence fixes that can be generated in 1-3 seconds, ideal for syntax errors, misspelled column names, and simple runtime errors. When Quick Fix is triggered, it takes the code and an error message, then uses an LLM to generate a targeted fix that resolves the error.

What problem did my intern project tackle?

While Quick Fix already existed and was helping Databricks users fix their code, there were plenty of ways to make it even better! For example, after we generate a code fix and run some basic checks that it follows syntax conventions, how can we make sure that the fix we end up showing a user is the most relevant and accurate one? Enter best-of-k sampling: generate multiple possible fix suggestions, then use a reward model to choose the best one.
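A minimal sketch of the selection step in best-of-k sampling, assuming the reward model can be wrapped as a function that maps a candidate fix to a score (higher is better). The `best_of_k` helper and the scores below are hypothetical and are not the production Quick Fix code.

```python
from typing import Callable, Sequence


def best_of_k(candidates: Sequence[str], score: Callable[[str], float]) -> str:
    """Return the candidate fix with the highest score.

    `score` is a stand-in for a reward model (hypothetical interface).
    """
    if not candidates:
        raise ValueError("need at least one candidate fix")
    # max() returns the first of several equally scored candidates.
    return max(candidates, key=score)


# Made-up reward scores for three candidate fixes to `SELECT nme FROM t`.
fake_scores = {
    "SELECT nam FROM t": 0.2,
    "SELECT name FROM t": 0.9,
    "SELECT nmae FROM t": 0.1,
}
print(best_of_k(list(fake_scores), score=fake_scores.__getitem__))  # SELECT name FROM t
```

Keeping the scorer as a plain function makes it easy to swap a simple heuristic for a trained model without touching the selection logic.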

My project structure

My project involved a mix of backend implementation and research experimentation, which I found to be fun and full of learning.

Generating multiple suggestions

I first expanded the Quick Fix backend flow to generate diverse suggestions in parallel using different prompts and contexts. I experimented with techniques like adding chain-of-thought reasoning, predicted-outputs reasoning, system prompt variations, and selective database context to maximize the quality and diversity of suggestions. We found that generating suggestions with additional reasoning increased our quality metrics but also incurred some latency cost.
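The parallel fan-out can be sketched with Python’s standard `concurrent.futures` module. The prompt variants and the `generate_fix` function are hypothetical placeholders for real LLM calls. The sketch shows that sending the k requests concurrently makes wall-clock latency track the slowest call rather than the sum of all calls.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical prompt variants, loosely mirroring the strategies described above.
PROMPT_VARIANTS = [
    "Fix the error. Return only the corrected code.",
    "Reason step by step about the cause of the error, then return the corrected code.",
    "Predict what the corrected code should output, then return the corrected code.",
]


def generate_fix(prompt: str, code: str, error: str) -> str:
    """Placeholder for an LLM call (hypothetical); echoes its inputs instead."""
    return f"{prompt} || {code}"


def generate_candidates(code: str, error: str, variants: list[str] = PROMPT_VARIANTS) -> list[str]:
    """Send one request per prompt variant concurrently; results keep the variants' order."""
    with ThreadPoolExecutor(max_workers=max(1, len(variants))) as pool:
        futures = [pool.submit(generate_fix, v, code, error) for v in variants]
        # Wall-clock time is bounded by the slowest request, not the sum of all of them.
        return [f.result() for f in futures]


for candidate in generate_candidates("SELECT nme FROM t", "column nme not found"):
    print(candidate)
```

Threads suit this pattern because each real request would spend most of its time waiting on network I/O.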

Choosing the best fix suggestion to show the user

After multiple suggestions are generated, we have to choose the best one to return. I started by implementing a simple majority voting baseline, which presented the user with the most frequently suggested fix, on the principle that a more commonly generated solution would likely be the most effective. This baseline performed well in offline evaluations but did not perform significantly better than the existing implementation in online user A/B testing, so it was not rolled out to production.
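A minimal sketch of a majority-voting baseline. It assumes that candidates differing only in whitespace should count as the same fix. That is an illustrative assumption, since the post does not say how candidates were compared.

```python
from collections import Counter


def normalize(fix: str) -> str:
    # Treat fixes that differ only in whitespace as the same suggestion.
    return " ".join(fix.split())


def majority_vote(candidates: list[str]) -> str:
    """Return the most frequently suggested fix (ties go to the earliest candidate)."""
    if not candidates:
        raise ValueError("need at least one candidate fix")
    counts = Counter(normalize(c) for c in candidates)
    # most_common orders equal counts by first occurrence.
    winner, _ = counts.most_common(1)[0]
    # Return the first original candidate matching the winning normalized form.
    return next(c for c in candidates if normalize(c) == winner)


candidates = ["SELECT name FROM t", "SELECT  name FROM t", "SELECT nam FROM t"]
print(majority_vote(candidates))  # SELECT name FROM t
```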

Additionally, I developed reward models to rank and select the most promising suggestions. I trained the models to predict which fixes users would accept and successfully execute. We used classical machine learning approaches (logistic regression and a gradient boosted decision tree using the LightGBM package) as well as fine-tuned LLMs.
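To illustrate the classical end of that spectrum, here is a dependency-free logistic regression reward model trained with batch gradient descent on log loss. The two features and the tiny dataset are invented for illustration. A real reward model would be trained on logged user acceptance and execution outcomes, and this sketch stands in for, rather than reproduces, the models used in the project.

```python
import math


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_accept_prob(w: list[float], b: float, x: list[float]) -> float:
    """Predicted probability that a user accepts (and runs) the fix."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def train_logistic_regression(X, y, lr=1.0, epochs=1000):
    """Batch gradient descent on mean log loss. X: feature rows; y: 0/1 labels."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = predict_accept_prob(w, b, xi) - yi  # d(log loss)/d(logit)
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


# Invented training rows: [similarity to original line, is_syntax_error] -> accepted?
X = [[0.95, 1.0], [0.90, 0.0], [0.30, 1.0], [0.20, 0.0]]
y = [1, 1, 0, 0]
w, b = train_logistic_regression(X, y)
print(predict_accept_prob(w, b, [0.92, 1.0]), predict_accept_prob(w, b, [0.25, 1.0]))
```

The predicted probability can be used directly as the score when picking among candidates.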

Results and impact

Surprisingly, for the task of predicting user acceptance and execution success of candidate fixes, the classical models performed comparably to the fine-tuned LLMs in offline evaluations. The decision tree model in particular may have performed well because code edits that “look right” for the kinds of errors Quick Fix handles tend to in fact be correct: the features that turned out to be particularly informative were the similarity between the original line of code and the generated fix, as well as the error type.
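The two informative features mentioned above are straightforward to compute. This sketch uses the standard library’s `difflib.SequenceMatcher.ratio()` as the similarity measure and a one-hot encoding of the error type. Both the similarity metric and the `ERROR_TYPES` list are assumptions, since the post does not specify how these features were defined.

```python
import difflib

# Hypothetical error categories; the real taxonomy is not described in the post.
ERROR_TYPES = ["syntax_error", "column_not_found", "runtime_error"]


def line_similarity(original: str, fixed: str) -> float:
    """Similarity in [0, 1] between the original line and the proposed fix."""
    return difflib.SequenceMatcher(None, original, fixed).ratio()


def featurize(original_line: str, fixed_line: str, error_type: str) -> list[float]:
    # One-hot encode the error type; unknown types map to all zeros.
    one_hot = [1.0 if error_type == t else 0.0 for t in ERROR_TYPES]
    return [line_similarity(original_line, fixed_line)] + one_hot


print(featurize("SELECT nme FROM t", "SELECT name FROM t", "column_not_found"))
```

A small, targeted edit such as fixing one misspelled column name keeps the similarity close to 1, which matches the intuition that fixes that “look right” tend to be correct.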

Given this performance, we decided to deploy the decision tree (LightGBM) model in production. Another factor in favor of the LightGBM model was its significantly faster inference time compared to the fine-tuned LLM. Speed is critical for Quick Fix, since suggestions must appear before the user manually edits their code, and any additional latency means fewer errors fixed. The small size of the LightGBM model made it much more resource-efficient and easier to productionize. Together with some model and infrastructure optimizations, this allowed us to decrease our average inference time by almost 100x.

With the best-of-k approach and reward model implemented, we were able to raise our internal acceptance rate, improving quality for our users. We also kept our latency within acceptable bounds of our original implementation.

If you want to learn more about the Databricks Assistant, check out the landing page or the Assistant Quick Fix Announcement.

My Internship Experience

Databricks culture in action

This internship was an incredible opportunity to contribute directly to a high-impact product. I gained firsthand insight into how Databricks’ culture encourages a strong bias for action while maintaining a high bar for system and product quality.

From the start, I noticed how intelligent yet humble everyone was. That impression only grew stronger over time, as I saw how genuinely supportive the team was. Even very senior engineers regularly went out of their way to help me succeed, whether by talking through technical challenges, offering thoughtful feedback, or sharing their past approaches and learnings.

I’d especially like to give a shoutout to my mentor Will Tipton, my managers Phil Eichmann and Shanshan Zheng, my informal mentors Rishabh Singh and Matt Hayes, the Editor / Assistant team, the Applied AI team, and the MosaicML folks for their mentorship. I’ve learned invaluable skills and life lessons from them, which I’ll take with me for the rest of my career.

The other awesome interns!

Last but not least, I had a great time getting to know the other interns! The recruiting team organized many fun events that helped us connect, and one of my favorites was the Intern Olympics (pictured below). Whether it was chatting over lunch, trying out local workout classes, or celebrating birthdays with karaoke, I really appreciated how supportive and close-knit the intern community was, both in and outside of work.

Intern Olympics! Go Team 2!

Shout-out to the other interns who tried boxing with me!

This summer taught me that the best learning happens when you’re solving real problems with real constraints, especially when you’re surrounded by smart, driven, and supportive people. The most rewarding part of my internship wasn’t just completing model training or presenting interesting results to the team, but realizing that I’ve grown in my ability to ask better questions, reason through design trade-offs, and ship a concrete feature from start to finish on a platform as widely used as Databricks.

If you want to work on cutting-edge projects with amazing teammates, I’d encourage you to apply to Databricks! Visit the Databricks Careers page to learn more about job openings across the company.