Microsoft continues to add to the conversation by unveiling its newest models, Phi-4-reasoning, Phi-4-reasoning-plus, and Phi-4-mini-reasoning.

A new era of AI

One year ago, Microsoft introduced small language models (SLMs) to customers with the release of Phi-3 on Azure AI Foundry, leveraging research on SLMs to expand the range of efficient AI models and tools available to customers.

Today, we’re excited to introduce Phi-4-reasoning, Phi-4-reasoning-plus, and Phi-4-mini-reasoning, marking a new era for small language models and once again redefining what is possible with small and efficient AI.

Reasoning models, the next step forward

Reasoning models are trained to leverage inference-time scaling to perform complex tasks that demand multi-step decomposition and internal reflection. They excel in mathematical reasoning and are emerging as the backbone of agentic applications with complex, multi-faceted tasks. Such capabilities are typically found only in large frontier models. Phi-reasoning models introduce a new category of small language models. Using distillation, reinforcement learning, and high-quality data, these models balance size and performance. They are small enough for low-latency environments yet maintain strong reasoning capabilities that rival much bigger models. This blend allows even resource-limited devices to perform complex reasoning tasks efficiently.

Phi-4-reasoning and Phi-4-reasoning-plus

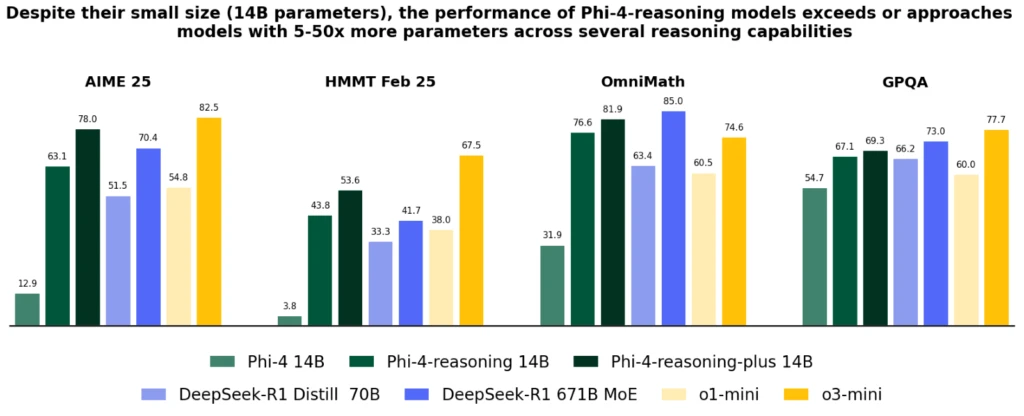

Phi-4-reasoning is a 14-billion parameter open-weight reasoning model that rivals much larger models on complex reasoning tasks. Trained via supervised fine-tuning of Phi-4 on carefully curated reasoning demonstrations from OpenAI o3-mini, Phi-4-reasoning generates detailed reasoning chains that effectively leverage additional inference-time compute. The model demonstrates that meticulous data curation and high-quality synthetic datasets allow smaller models to compete with larger counterparts.

Phi-4-reasoning-plus builds upon Phi-4-reasoning capabilities. It is further trained with reinforcement learning to utilize more inference-time compute, using 1.5x more tokens than Phi-4-reasoning, to deliver higher accuracy.
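To make the cost of that extra inference-time compute concrete, here is a minimal back-of-the-envelope sketch. Only the 1.5x token multiplier comes from the text above; the function names, the 4,000-token baseline, and the 50 tokens-per-second decode rate are hypothetical values chosen for illustration, not measurements of either model.

```python
# Illustrative estimate only: the 1.5x multiplier is the figure quoted for
# Phi-4-reasoning-plus relative to Phi-4-reasoning. Everything else here
# (names, baseline token count, decode rate) is an assumption.

TOKEN_MULTIPLIER = 1.5  # Phi-4-reasoning-plus vs. Phi-4-reasoning


def estimated_plus_tokens(base_tokens: int,
                          multiplier: float = TOKEN_MULTIPLIER) -> int:
    """Estimate tokens generated by the -plus model for the same prompt."""
    return round(base_tokens * multiplier)


def estimated_decode_seconds(tokens: int, tokens_per_second: float) -> float:
    """Estimate decode time, assuming a constant generation rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return tokens / tokens_per_second


if __name__ == "__main__":
    base = 4000   # hypothetical reasoning-chain length for Phi-4-reasoning
    rate = 50.0   # hypothetical decode speed in tokens per second

    plus = estimated_plus_tokens(base)
    print(f"Phi-4-reasoning:      {base} tokens, "
          f"{estimated_decode_seconds(base, rate):.0f} s")
    print(f"Phi-4-reasoning-plus: {plus} tokens, "
          f"{estimated_decode_seconds(plus, rate):.0f} s")
```

Under these assumed numbers, the longer reasoning chain costs proportionally more decode time, which is the trade-off to weigh against the accuracy gain when choosing between the two models.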

Despite their significantly smaller size, both models achieve better performance than OpenAI o1-mini and DeepSeek-R1-Distill-Llama-70B on most benchmarks, including mathematical reasoning and Ph.D.-level science questions. They also outperform the full DeepSeek-R1 model (with 671 billion parameters) on the AIME 2025 test, the 2025 qualifier for the USA Math Olympiad. Both models are available on Azure AI Foundry and Hugging Face.

Phi-4-reasoning models introduce a major improvement over Phi-4, surpass larger models like DeepSeek-R1-Distill-Llama-70B, and approach DeepSeek-R1 across various reasoning and general capabilities, including math, coding, algorithmic problem solving, and planning. The technical report provides extensive quantitative evidence of these improvements through diverse reasoning tasks.

Phi-4-mini-reasoning

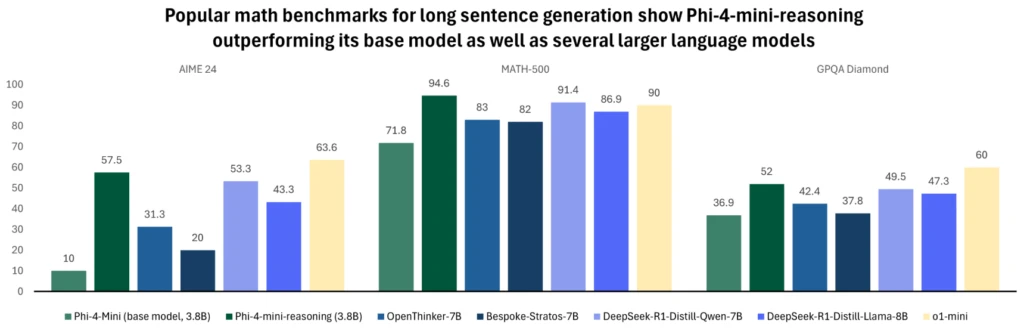

Phi-4-mini-reasoning is designed to meet the demand for a compact reasoning model. This transformer-based language model is optimized for mathematical reasoning, providing high-quality, step-by-step problem solving in environments with constrained computing or latency. Fine-tuned with synthetic data generated by the DeepSeek-R1 model, Phi-4-mini-reasoning balances efficiency with advanced reasoning ability. It is trained on over one million diverse math problems spanning multiple levels of difficulty, from middle school to Ph.D. level, which makes it ideal for educational applications, embedded tutoring, and lightweight deployment on edge or mobile systems. Try out the model on Azure AI Foundry or Hugging Face today.

For more information about the model, read the technical report, which provides additional quantitative insights.

Phi’s evolution over the last year has continually pushed this envelope of quality vs. size, expanding the family with new features to address diverse needs. Across the scale of Windows 11 devices, these models are available to run locally on CPUs and GPUs.

As Windows works towards creating a new type of PC, Phi models have become an integral part of Copilot+ PCs with the NPU-optimized Phi Silica variant. This highly efficient, OS-managed version of Phi is designed to be preloaded in memory, with fast time-to-first-token responses and power-efficient token throughput, so it can be invoked concurrently with other applications running on your PC.

It is used in core experiences like Click to Do, providing useful text intelligence tools for any content on your screen, and is available as developer APIs to be readily integrated into applications. It is already used in several productivity applications like Outlook, where it powers Copilot summary features offline. These small but mighty models have already been optimized and integrated for use across several applications across the breadth of our PC ecosystem. The Phi-4-reasoning and Phi-4-mini-reasoning models leverage the low-bit optimizations for Phi Silica and will be available to run soon on Copilot+ PC NPUs.

Update, May 15, 2025: Today, we’re pleased to announce that the Phi-4-reasoning and Phi-4-mini-reasoning models optimized using ONNX are now available to use on your Snapdragon-powered Copilot+ PCs. By offloading these models to the Neural Processing Unit (NPU), inference-time compute consumes significantly less power. This allows reasoning models such as Phi-4-reasoning to utilize inference-time compute for higher accuracy more efficiently. Get started today by downloading the AI Toolkit extension in VS Code.

Safety and Microsoft’s approach to responsible AI

At Microsoft, responsible AI is a fundamental principle guiding the development and deployment of AI systems, including our Phi models. Phi models are developed in accordance with Microsoft AI principles: accountability, transparency, fairness, reliability and safety, privacy and security, and inclusiveness.

The Phi family of models has adopted a robust safety post-training approach, leveraging a combination of Supervised Fine-Tuning (SFT), Direct Preference Optimization (DPO), and Reinforcement Learning from Human Feedback (RLHF) techniques. These methods utilize various datasets, including publicly available datasets focused on helpfulness and harmlessness, as well as various safety-related questions and answers. While the Phi family of models is designed to perform a wide range of tasks effectively, it is important to acknowledge that all AI models may exhibit limitations. To better understand these limitations and the measures in place to address them, please refer to the model cards below, which provide detailed information on responsible AI practices and guidelines.

Learn more here: