Imagine an AI that doesn’t just answer your questions, but thinks ahead, breaks tasks down, creates its own TODOs, and even spawns sub-agents to get the work done. That’s the promise of Deep Agents. AI agents already take the capabilities of LLMs a notch higher, and today we’ll look at Deep Agents to see how they can push that notch even further. Deep Agents is built on top of LangGraph, a library designed specifically to create agents capable of handling complex tasks. Let’s take a deeper look at Deep Agents, understand their core capabilities, and then use the library to build our own AI agents.

Deep Agents

LangGraph gives you a graph-based runtime for stateful workflows, but you still have to build your own planning, context management, and task-decomposition logic from scratch. DeepAgents (built on top of LangGraph) bundles planning tools, virtual file-system based memory, and subagent orchestration out of the box.

You can use DeepAgents via the standalone deepagents library. It includes planning capabilities, can spawn sub-agents, and uses a filesystem for context management. It can also be paired with LangSmith for deployment and monitoring. Agents built with the library use the “claude-sonnet-4-5-20250929” model by default, but this can be customized. Before we start creating the agents, let’s understand the core components.

Core Components

- Detailed System Prompts – The deep agent uses a system prompt with detailed instructions and examples.

- Planning Tools – Deep agents have a built-in planning tool: a TODO list management tool. It helps them stay focused even while performing a complex task.

- Sub-Agents – Sub-agents are spawned for delegated tasks, and they execute in context isolation.

- File System – A virtual filesystem for context and memory management. Agents use files as a tool to offload context to memory when the context window is full.
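To make the planning and file-system components more concrete, here is a minimal conceptual sketch in plain Python. It is not how the deepagents library is implemented; `AgentState`, `complete_todo`, and the helper functions are hypothetical names chosen for illustration, and the only idea taken from the list above is that a TODO list and a virtual filesystem live in the agent's state.

```python
from dataclasses import dataclass, field


@dataclass
class AgentState:
    """Toy stand-in for the state a deep agent carries between steps."""
    todos: list = field(default_factory=list)  # planning tool output
    files: dict = field(default_factory=dict)  # virtual filesystem: path -> text


def write_todos(state, items):
    """Replace the TODO list; every item starts as 'pending'."""
    state.todos = [{"content": item, "status": "pending"} for item in items]


def complete_todo(state, index):
    """Mark one TODO as completed."""
    state.todos[index]["status"] = "completed"


def write_file(state, path, text):
    """Offload text from the prompt into the virtual filesystem."""
    state.files[path] = text


def read_file(state, path):
    """Load offloaded text back when it is needed again."""
    return state.files[path]


state = AgentState()
write_todos(state, ["search the web", "write the report"])
write_file(state, "/notes.md", "LangGraph is a graph-based runtime.")
complete_todo(state, 0)

print([todo["status"] for todo in state.todos])
print(read_file(state, "/notes.md"))
```

Because the notes live in `state.files` rather than in the conversation, the agent could drop them from its prompt and read them back only when a later TODO needs them.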

Building a Deep Agent

Now let’s build a research agent using the ‘deepagents’ library. It will use Tavily for web search and will have all the components of a deep agent.

Note: We’ll be doing the tutorial in Google Colab.

Prerequisites

You’ll need an OpenAI key for the agent we’ll be creating; you can also choose a different model provider such as Gemini or Claude. Get your OpenAI key from the platform: https://platform.openai.com/api-keys

Also get a Tavily API key for web search from here: https://app.tavily.com/home

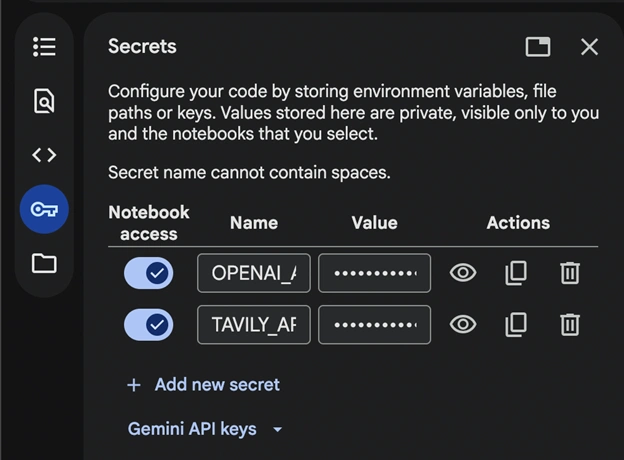

Open a new notebook in Google Colab and add the secret keys:

Save the keys as OPENAI_API_KEY and TAVILY_API_KEY for the demo, and don’t forget to turn on notebook access.


Requirements

!pip install deepagents tavily-python langchain-openai

We’ll install these libraries, which are needed to run the code.

Imports and API Setup

import os
from deepagents import create_deep_agent
from tavily import TavilyClient
from langchain.chat_models import init_chat_model
from google.colab import userdata

# Set API keys
TAVILY_API_KEY = userdata.get("TAVILY_API_KEY")
os.environ["OPENAI_API_KEY"] = userdata.get("OPENAI_API_KEY")

We’re storing the Tavily API key in a variable and the OpenAI API key in the environment.
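If you want the same notebook code to run outside Colab too, one option is a small helper that falls back to environment variables. This is a sketch under that assumption; `get_secret` and `require_secrets` are hypothetical helper names, not part of any library used in this tutorial.

```python
import os


def get_secret(name):
    """Return a secret from Colab if available, else from the environment."""
    try:
        # This import only succeeds inside Google Colab.
        from google.colab import userdata
    except ImportError:
        return os.environ.get(name)
    return userdata.get(name)


def require_secrets(names):
    """Raise one clear error that lists every missing key."""
    missing = [name for name in names if not get_secret(name)]
    if missing:
        raise RuntimeError("Missing API keys: " + ", ".join(missing))
```

Calling `require_secrets(["OPENAI_API_KEY", "TAVILY_API_KEY"])` before building the agent surfaces a missing key up front instead of partway through a long run. Inside Colab, a secret that was never added makes `userdata.get` raise its own error rather than return an empty value.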

Defining the Tools, Sub-Agent and the Agent

# Initialize Tavily client
tavily_client = TavilyClient(api_key=TAVILY_API_KEY)

# Define web search tool
def internet_search(query: str, max_results: int = 5) -> dict:
    """Run a web search to find current information"""
    # The Tavily client returns the response as a dictionary
    results = tavily_client.search(query, max_results=max_results)
    return results

# Define a specialized research sub-agent
research_subagent = {
    "name": "data-analyzer",
    "description": "Specialized agent for analyzing data and creating detailed reports",
    "system_prompt": """You are an expert data analyst and report writer.
    Analyze information thoroughly and create well-structured, detailed reports.""",
    "tools": [internet_search],
    "model": "openai:gpt-4o",
}

# Initialize GPT-4o-mini model
model = init_chat_model("openai:gpt-4o-mini")

# Create the deep agent
# The agent automatically has access to: write_todos, read_todos, ls, read_file,
# write_file, edit_file, glob, grep, and task (for subagents)
agent = create_deep_agent(
    model=model,
    tools=[internet_search],  # Passing the tool
    system_prompt="""You are a thorough research assistant. For this task:
    1. Use write_todos to create a task list breaking down the research
    2. Use internet_search to gather current information
    3. Use write_file to save your findings to /research_findings.md
    4. You can delegate detailed analysis to the data-analyzer subagent using the task tool
    5. Create a final comprehensive report and save it to /final_report.md
    6. Use read_todos to check your progress
    Be systematic and thorough in your research.""",
    subagents=[research_subagent],
)

We have defined a tool for web search and passed it to our agent. We’re using OpenAI’s ‘gpt-4o-mini’ for this demo. You can change this to any model.
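A search tool can return a lot of text, which fills the context window quickly. One way to keep tool output compact is to format the response before handing it back to the agent. The sketch below assumes the search response is a dictionary with a "results" list whose items carry "title", "url", and "content" keys; check this against the provider's actual response before relying on it. `format_results` is a hypothetical helper, not part of tavily or deepagents.

```python
def format_results(response, max_chars=300):
    """Turn a search response dict into a compact, numbered text block."""
    lines = []
    for i, item in enumerate(response.get("results", []), start=1):
        # Truncate each snippet so one long page cannot flood the context.
        snippet = item.get("content", "")[:max_chars]
        title = item.get("title", "untitled")
        url = item.get("url", "")
        lines.append(f"{i}. {title} ({url})\n   {snippet}")
    return "\n".join(lines) if lines else "No results."


# Hypothetical sample response, shaped according to the assumption above.
sample = {
    "results": [
        {"title": "LangGraph docs", "url": "https://example.com/a", "content": "x" * 500},
    ]
}
print(format_results(sample, max_chars=20))
```

Inside `internet_search`, you could return `format_results(results)` instead of the raw dictionary if you prefer the tool to hand back plain text.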

Also note that we didn’t create any files or define anything for the file system needed for offloading context and for the todo list. These are already pre-built in ‘create_deep_agent()’, and the agent has access to them.

Running Inference

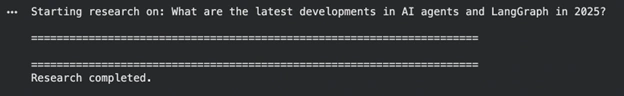

# Research query
research_topic = "What are the latest developments in AI agents and LangGraph in 2025?"
print(f"Starting research on: {research_topic}\n")
print("=" * 70)

# Execute the agent
result = agent.invoke({
    "messages": [{"role": "user", "content": research_topic}]
})

print("\n" + "=" * 70)
print("Research completed.\n")

Note: The agent execution might take a while.

Viewing the Output

# Agent execution trace
print("AGENT EXECUTION TRACE:")
print("-" * 70)
for i, msg in enumerate(result["messages"]):
    if hasattr(msg, 'type'):
        print(f"\n[{i}] Type: {msg.type}")
        if msg.type == "human":
            print(f"Human: {msg.content}")
        elif msg.type == "ai":
            if hasattr(msg, 'tool_calls') and msg.tool_calls:
                print(f"AI tool calls: {[tc['name'] for tc in msg.tool_calls]}")
            if msg.content:
                print(f"AI: {msg.content[:200]}...")
        elif msg.type == "tool":
            print(f"Tool '{msg.name}' result: {str(msg.content)[:200]}...")

# Final AI response
print("\n" + "=" * 70)
final_message = result["messages"][-1]
print("FINAL RESPONSE:")
print("-" * 70)
print(final_message.content)

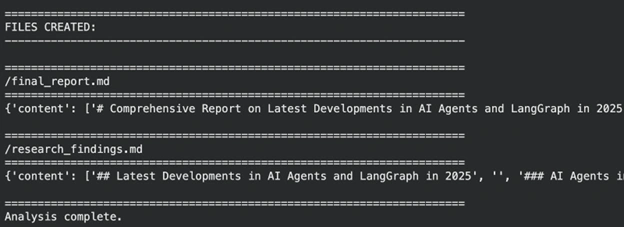
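A long trace can be hard to scan, so it may help to condense it into a count of how often each tool was requested. The sketch below relies only on the message attributes the trace loop already uses (`type` and `tool_calls`); `count_tool_calls` is a hypothetical helper, and the demo messages are stand-ins so the example runs without an API key.

```python
from collections import Counter
from types import SimpleNamespace


def count_tool_calls(messages):
    """Count how often each tool was requested across AI messages."""
    counts = Counter()
    for msg in messages:
        if getattr(msg, "type", None) == "ai":
            # tool_calls may be missing or empty on plain text replies.
            for call in getattr(msg, "tool_calls", None) or []:
                counts[call["name"]] += 1
    return counts


# Hypothetical stand-in messages that mimic the shape of a real run.
demo_messages = [
    SimpleNamespace(type="human", content="Research AI agents"),
    SimpleNamespace(type="ai", content="", tool_calls=[{"name": "write_todos"}, {"name": "internet_search"}]),
    SimpleNamespace(type="tool", name="internet_search", content="..."),
    SimpleNamespace(type="ai", content="", tool_calls=[{"name": "internet_search"}]),
    SimpleNamespace(type="ai", content="Done.", tool_calls=[]),
]

for name, n in count_tool_calls(demo_messages).most_common():
    print(f"{name}: {n}")
```

On a real run you would pass the agent's own message list to the helper, for example `count_tool_calls(result["messages"])`.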

# Files created
print("\n" + "=" * 70)
print("FILES CREATED:")
print("-" * 70)
if "files" in result and result["files"]:
    for filepath in sorted(result["files"].keys()):
        content = result["files"][filepath]
        print(f"\n{'=' * 70}")
        print(f"{filepath}")
        print(f"{'=' * 70}")
        print(content)
else:
    print("No files found.")
print("\n" + "=" * 70)
print("Analysis complete.")

As we can see, the agent did a good job: it maintained a virtual file system, gave a response after multiple iterations, and worked the way a ‘deep agent’ should. But there is scope for improvement in our system; let’s look at it in the next section.

Potential Improvements in our Agent

We built a simple deep agent, but you can challenge yourself and build something much better. Here are a few things you can do to improve this agent:

- Use Long-term Memory – The deep agent can preserve user preferences and feedback in files (/memories/). This will help the agent give better answers and build a knowledge base from the conversations.

- Control the File System – By default the files are stored in a virtual state; you can change this to a different backend or the local disk using the ‘FilesystemBackend’ from deepagents.backends.

- Refine the System Prompts – You can test out multiple prompts to see which works best for you.

Conclusion

We have successfully built our deep agent and can now see how AI agents can push LLM capabilities a notch higher, using LangGraph to handle the tasks. With built-in planning, sub-agents, and a virtual file system, they manage TODOs, context, and research workflows smoothly. Deep Agents are great, but remember that if a task is simple enough to be handled by a basic agent or a single LLM call, using them is not recommended.

Frequently Asked Questions

A. Yes. Instead of Tavily, you can integrate SerpAPI, Firecrawl, Bing Search, or any other web search API. Simply replace the search function and tool definition to match the new provider’s response format and authentication method.

A. Absolutely. Deep Agents are model-agnostic, so you can switch to Claude, Gemini, or other OpenAI models by changing the model parameter. This flexibility lets you optimize for performance, cost, or latency depending on your use case.

A. No. Deep Agents automatically provide a virtual filesystem for managing memory, files, and long contexts. This eliminates the need for manual setup, although you can configure custom storage backends if required.

A. Yes. You can create multiple sub-agents, each with its own tools, system prompts, and capabilities. This allows the main agent to delegate work more effectively and handle complex workflows through modular, distributed reasoning.
